Preface

What is this book?

This book is a guide about:

-

Software delivery: how to run & maintain software in production?

-

DevOps: a methodology to make software delivery more efficient

Instead of focus on culture & theory, this book focuses on hand-ons guide:

- Step-by-step examples about how to run real system & real code.

- Core concepts & best practices of modern DevOps and software delivery.

Why this book exists?

-

There is no hands-on guide that teach software delivery end-to-end.

-

Software delivery is current learned in the hard way - through trial and error - which can be very costly (outages, data lose, security breaches…)

note

The author learned from his experience when he worked at LinkedIn in 2011:

- LinkedIn’d just IPO, share price was up 100%, revenue was growing 100% by year, 100M members, growing fast.

- From the inside, the company was in turmoil because of software delivery - a $10 billion company could not deploy code:

- They deployed once every 2 weeks through a painful, tedious, slow, error-prone way

- A deployment went so bad, that it could not be completed; new changes, some fixes, more issues…Team worked overnight several days, then everything was roll-backed.

- They kicked of Project Inversion:

- new features development was freezed for several months

- the entire engineering, product, design team reworked all the infrastructure, tooling, technique

- Months later, they could deploy dozens of times per day:

- with fewer issues, outages

- allowing the whole company move much faster

note

How did they do that?

-

They didn’t know what they didn’t know

-

They learn about best practices from the industry:

- Trunk-based development (from one company)

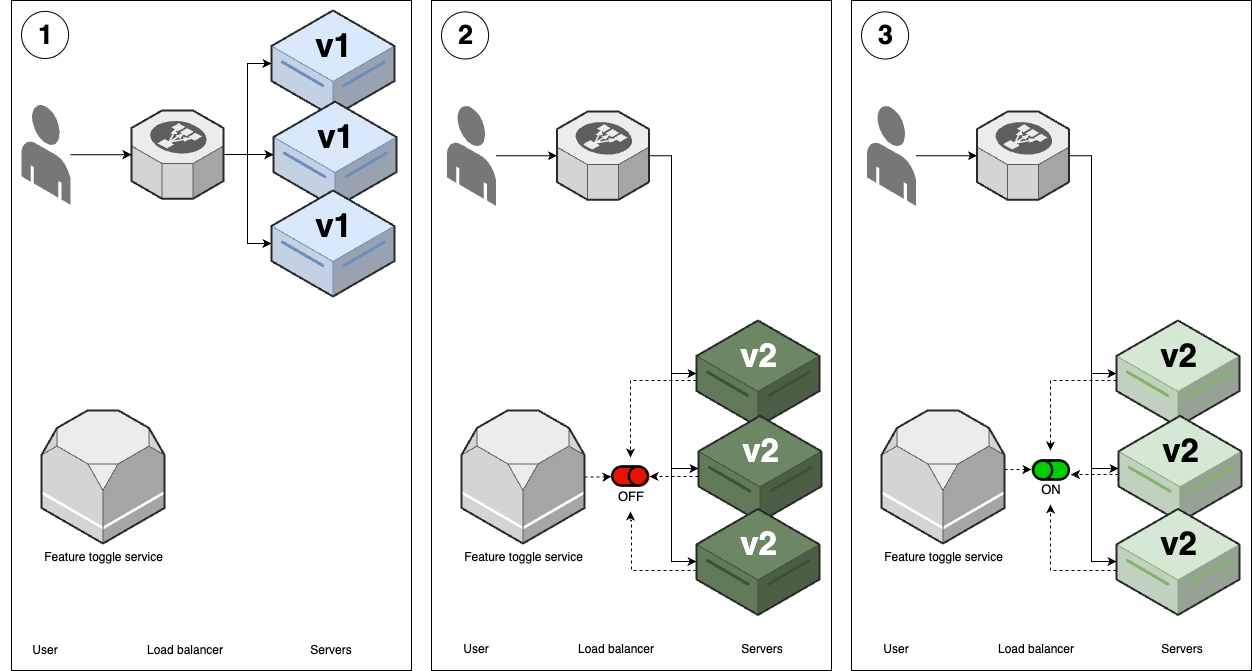

- Canary deployment (from another)

- Feature toggles (from another)

- …

-

Most developers don’t know what they don’t know:

- About software delivery and DevOps

- Best practices that top tech companies had figured out

-

This book helps you learn from the experience of others so you can build software faster, more reliably and more securely.

warning

The results from adopting DevOps can be wonderful, but the experience along the way may be not.

Watch out for snakes

-

“DevOps” is used to describe a lot of unrelated concepts. ⛓️💥🌕🌑

e.g. A TLS certificate (& the cryptography behind it), a deployment pipeline, and backing up data from a database.

note

What makes DevOps hard? (It’s not the complexity of the concepts)

- It’s the number of concepts to master (DevOps is an incredibly broad surface area)

- It’s how to get everything connected together correctly (or nothing works at all)

-

“DevOps” is a box of cables. 🧰⛓️

You pull out a cable but end up with a giant mess where everything is tangled together

tip

This book try to untangle this mess of cables:

- Each cable in that mess is in fact a separate cable.

- In isolation, each concept in DevOps (a cable in that mess) is within your grasp.

-

Sometimes, DevOps even feels like a box of snakes. 🧰🐍🐍

You pull of a cable but end up getting bitten.

caution

DevOps is current a giant mess:

- A new industry

- Tools, techniques aren’t mature

- It often feels like everything is broken, frustrating & hopelessly tangled

-

In DevOps, each time you learn a new buzzword (a new concept):

- it comes with 10 more unfamiliar buzzwords (it’s a mess of cables)

- or it might try to by you (a cable or a snake)

but stick with it & watch for the snake

Who should read this book?

Anyone responsible for deploying & managing apps in production:

-

Individual contributors in operations roles: SREs, DevOps Engineers, SysAdmins…, who wants to level up about software delivery & DevOps.

-

Individual contributors in development roles: Software Engineers/Developers.., who wants to learn about the operations side.

-

Managers: Engineering Managers, CTOs…, who want to adopt DevOps & software delivery best practices in their organizations.

What is in this book?

| Chapter | Key ideas | Hand-ons example |

|---|---|---|

| 1. An Introduction to DevOps & Software Delivery | The evolution of DevOps. | |

| On-prem vs cloud. | ||

| PaaS vs IaaS. | - Run an app on Fly.io. - Run an app an EC2 instance in AWS. | |

| 2. How to Manage Infrastructure as Code | Ad hoc scripts. | Use Bash to deploy an EC2 instance. |

| Configuration management tools. | Use Ansible to deploy an EC2 instance. | |

| Server templating tools. | Use Packer to build an AMI. | |

| Provisioning tools. | Use OpenTofu to deploy an EC2 instance. | |

| 3. How to Deploy Many Apps: Orchestration, VMs, Containers, and Serverless | Server orchestration. | Use Ansible to deploy app servers & Nginx. |

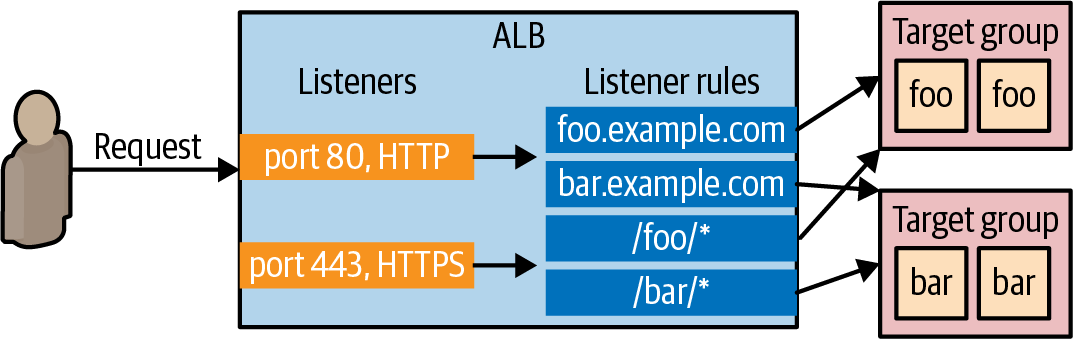

| VM orchestration. | Use OpenTofu to deploy an ASG and ALB. | |

| Container orchestration. | Deploy a Dockerized app in Kubernetes. | |

| Serverless orchestration. | Deploy a serverless app with AWS Lambda. | |

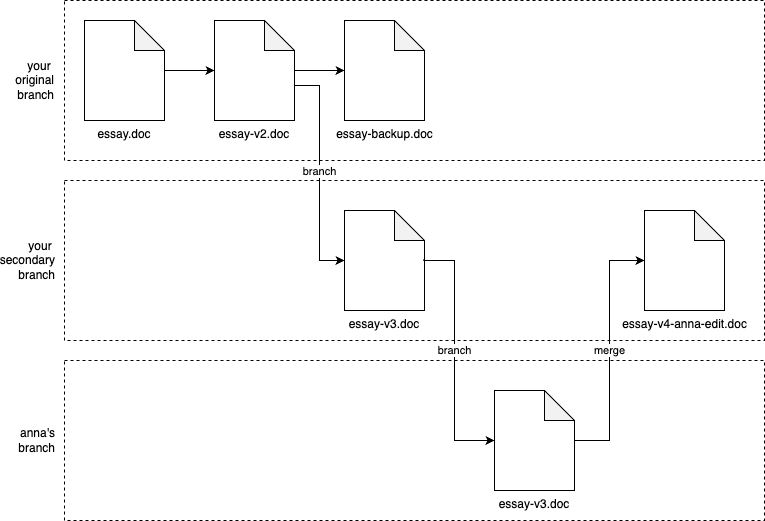

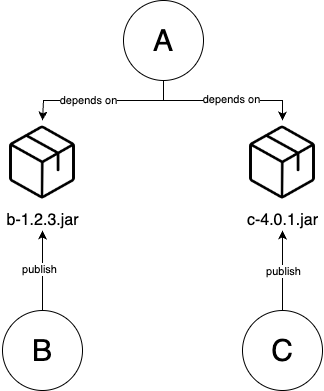

| 4. How to Version, Build & Test Your Code | Version control. | Store your code in GitHub and use PRs. |

| Build systems. | Configure your build in NPM. | |

| Dependency management. | ||

| Automated testing. | - Set up automated tests for a Node.js app. - Set up automated tests for OpenTofu code. | |

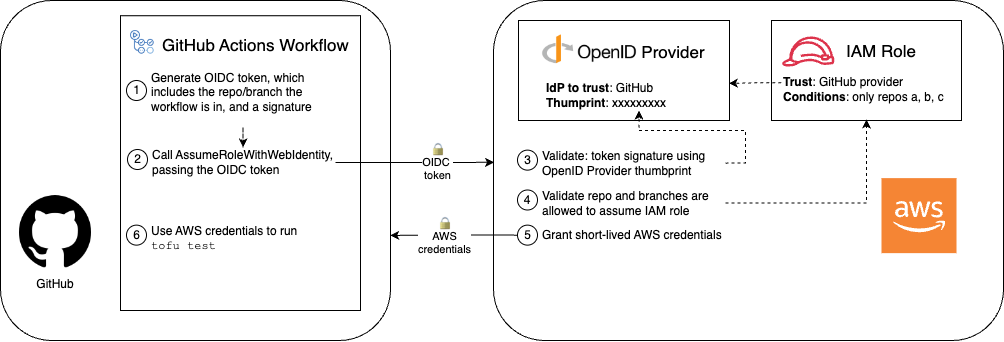

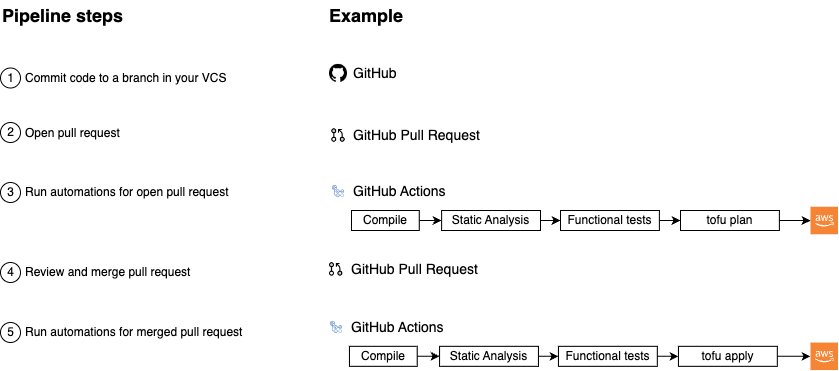

| 5. How to Set Up CI/CD | Trunk-based development. | - Use OIDC with GitHub Actions and AWS. - Run tests in GitHub Actions. |

| Branch by abstraction. | ||

| Feature toggles. | ||

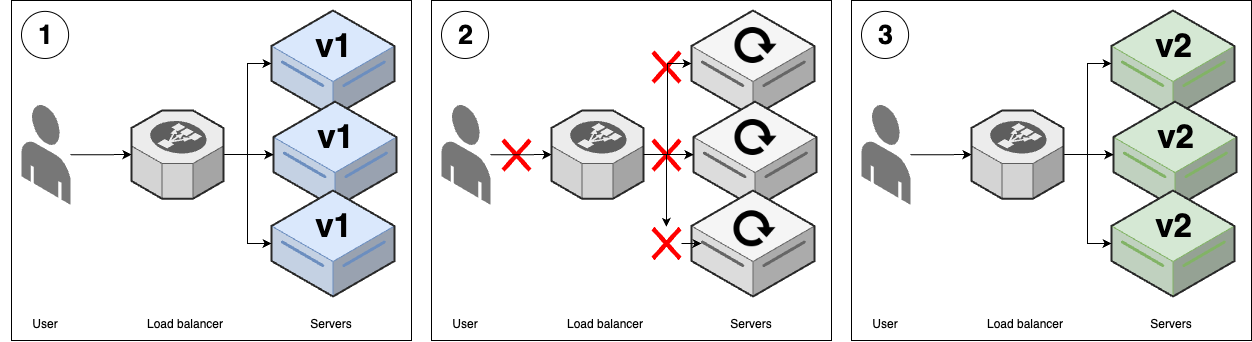

| Deployment strategies and pipelines. | Run deployments in GitHub Actions. | |

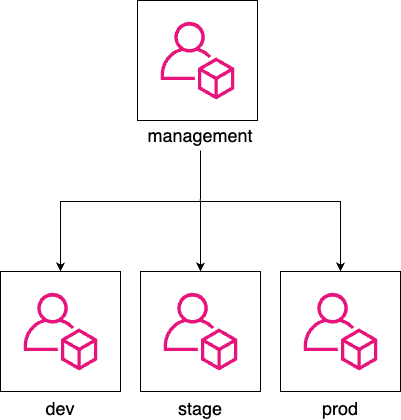

| 6. How to Work with Multiple Teams & Environments | Internal developer platforms. | Create multiple AWS accounts. |

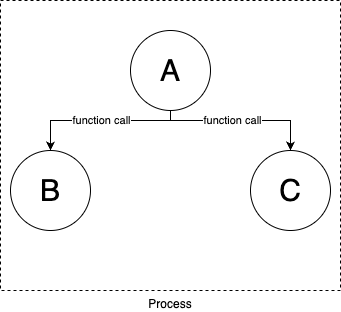

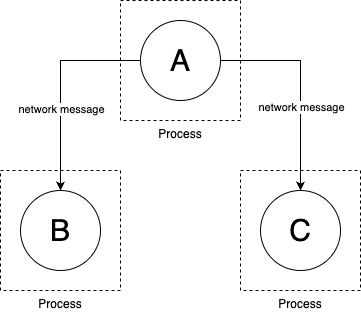

| Microservices. | Deploy microservices in Kubernetes. | |

| Updating and patching. | Configure automated updates. | |

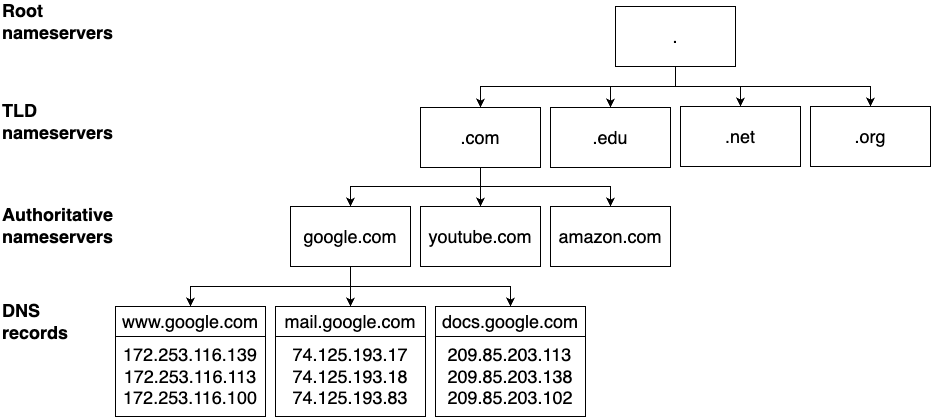

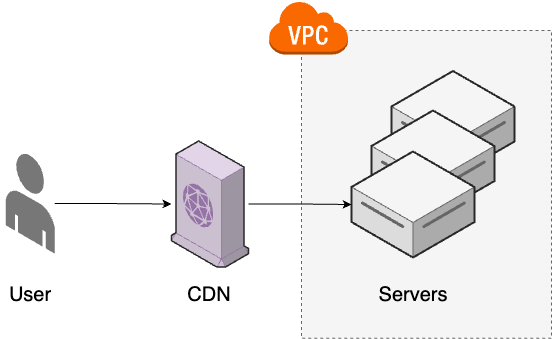

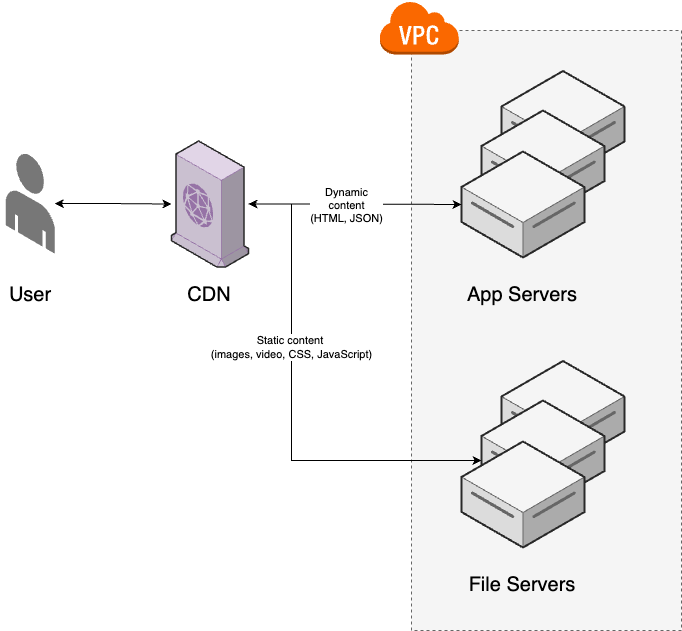

| 7. How to Set Up Networking: VPCs, VPN, and DNS | DNS, domain names, CDN. | Set up a custom domain name in Route 53. |

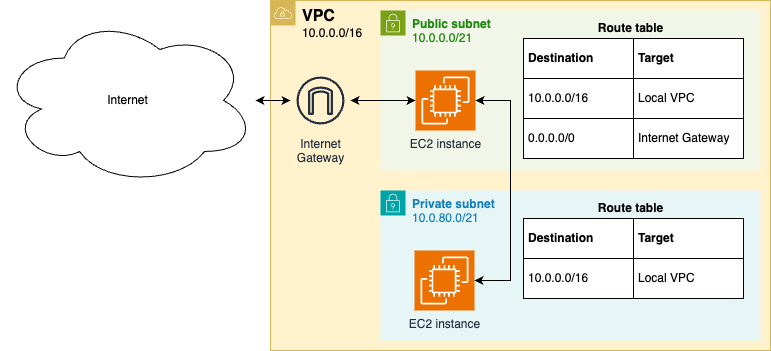

| Virtual private clouds (VPCs). | Deploy a custom VPC in AWS. | |

| Service discovery, service meshes. | Do service discovery with Kubernetes. | |

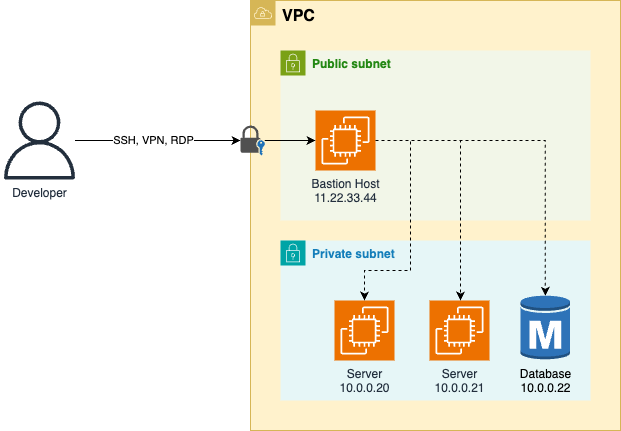

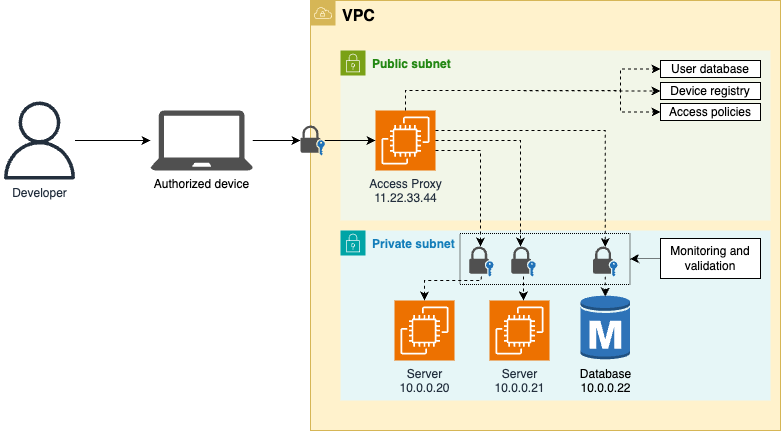

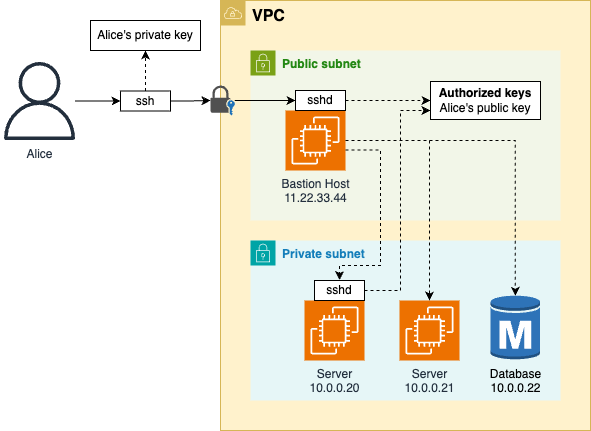

| Network access and hardening. | Use SSH and EC2 Instance Connect. | |

| 8. How to Manage Authentication, Authorization & Secrets | Authentication and user management. | |

| Authorization, permissions, ACLs. | Set up SSO and roles for AWS. | |

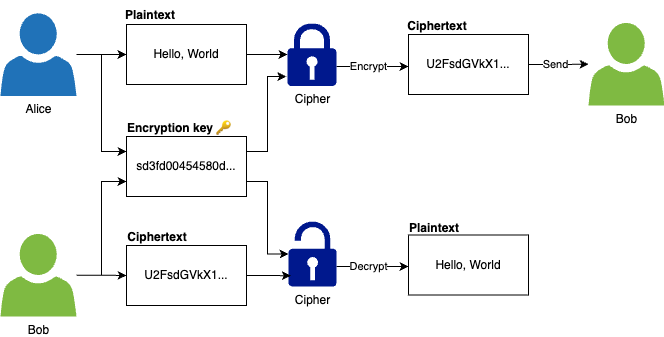

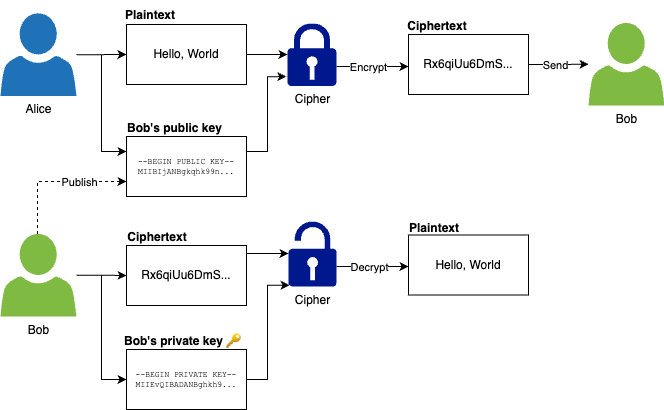

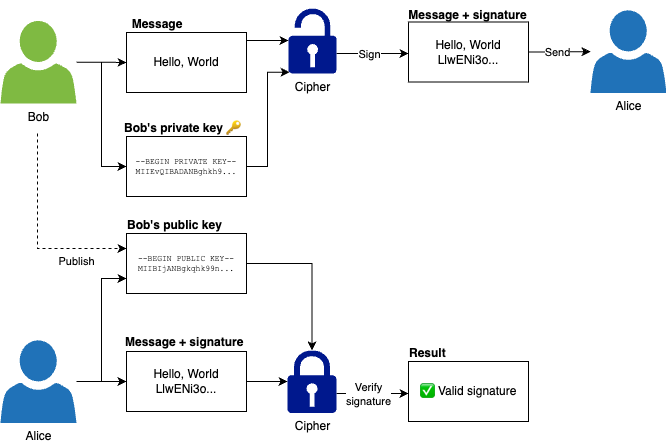

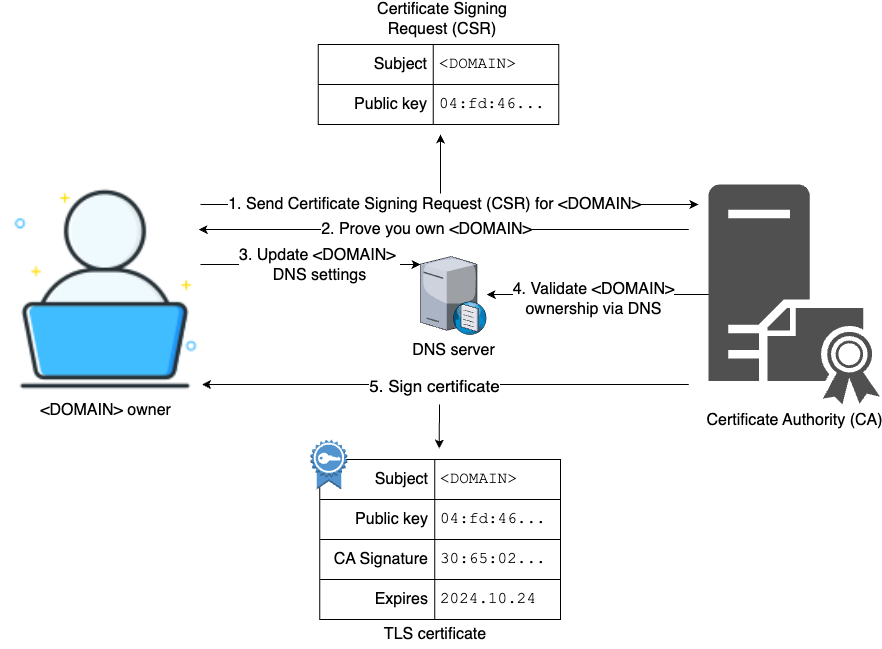

| Encryption at rest and in transit. | Use ACM to provision a TLS certificate. | |

| Secrets management. | Store secrets in AWS Secrets Manager. | |

| 9. How to Store Data: SQL, NoSQL, Queues, Warehouses, | Local and network drives. | |

| Relational DBs, schema management. | - Deploy PostgreSQL using RDS. - Deploy Redis using ElastiCache. - Use Flyway for schema migrations. | |

| NoSQL, queues, data warehouses. | ||

| File storage. | Use S3 and CloudFront for static assets. | |

| 10. How to Monitor Systems: Metrics, Logs, Alerts, and Observability | Metrics and dashboards. | Create a dashboard in Grafana. |

| Logs and log aggregation. | Aggregate logs in Elasticsearch. | |

| Alerts and on-call rotations. | Set up alerts in CloudWatch. | |

| Observability and tracing. | ||

| 11. The Future of DevOps and Software Delivery | Serverless. | |

| AI. | ||

| DevSecOps, shift left, supply chain. | ||

| Infrastructure from code, runbooks. |

What isn’t in this book?

| DevOps, software delivery’s topic | What isn’t in this book |

|---|---|

| DevOps culture & team dynamics | Cross-functional teams, high-trust environments, collaboration tools/techniques |

| Organization processes | Capacity, blameless postmortem, on-call rotation, KPIs, SLOs, error budgets… |

| Server hardening | OS permissions, intrusion protection, file integrity monitoring, sandboxing, hardened images… |

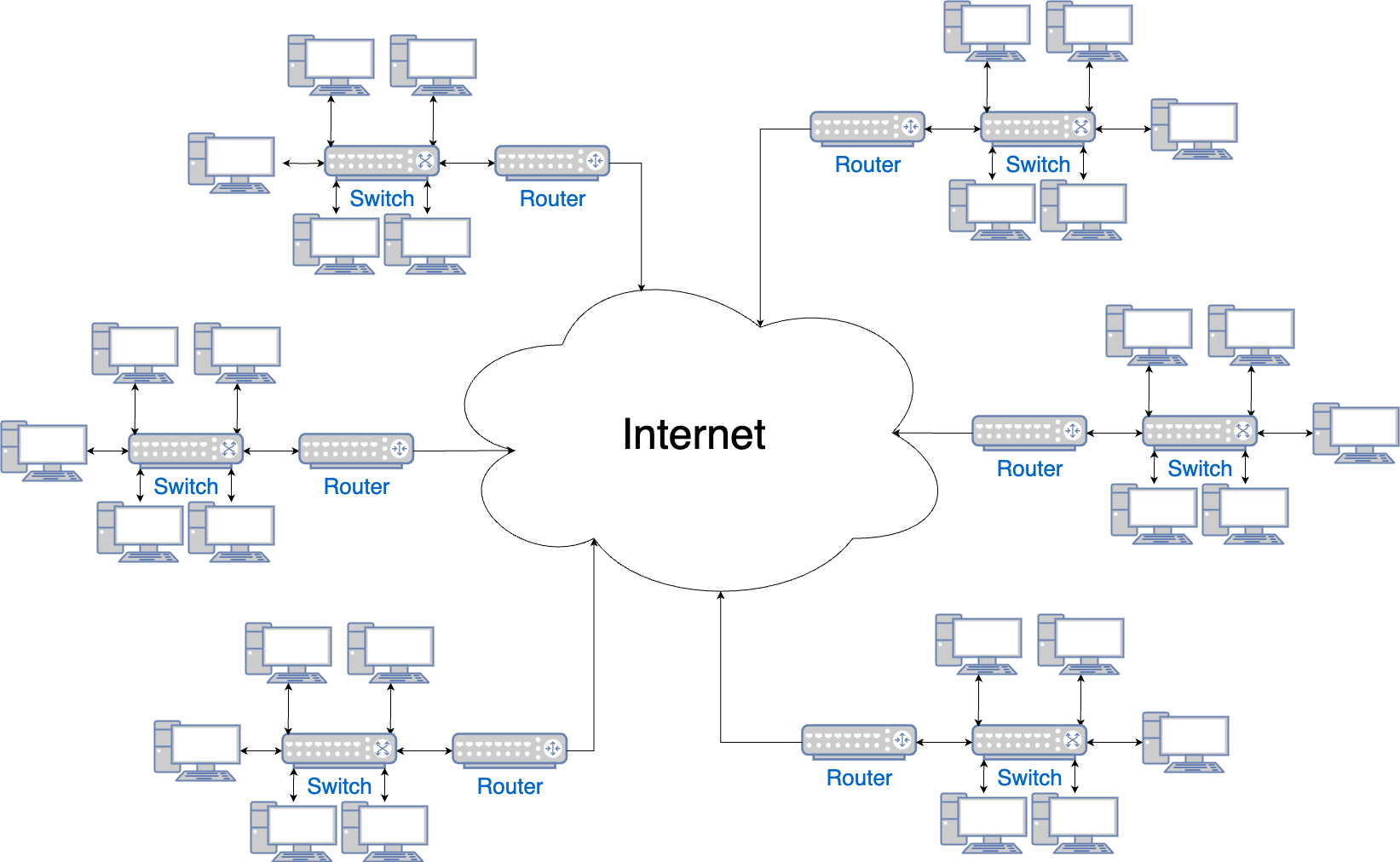

| Low-level networking | Routers, switches, links, routing protocols… |

| Compliance | A detail guide to meed any standard, e.g. SOC2, ISO 270001, HIPAA, GDPR… |

| Cost optimization & performance tuning | A detail guide to reduce costs & improve performance |

Code examples

-

This book includes many examples to work through, which is available at GitHub repository: https://github.com/brikis98/devops-book

-

The code samples are organized

- by chapter (e.g.

ch1,ch2),- and within each chapter, by tool (e.g.

ansible,kubernetes,tofu)

- and within each chapter, by tool (e.g.

- by chapter (e.g.

tip

The examples show what the code looks like at the end of a chapter.

To maximum the the learning:

- write the code yourself

- check the “official” solutions at the end

Opinionated Code Examples

The code examples represents just one opinionated way to implement this book core concepts - IaC, CI/CD…

important

In real world, there is no single “best” way that applies to all situations:

- All technology choices has a trade-off.

- Some solution maybe a better fit in some situations that others.

Always use your judgment to pick the right tool for the job.

note

The core concepts in this book only change & evolve over a long time span (5-10 years). But the code examples that implement these core concepts may change more frequently. e.g. Kubernetes has a release cycle of 4-month1.

You Have to Get Your Hands Dirty

This book will teach you principles, techniques, tools about DevOps & software delivery.

But you can only achieve serious results if you learn by doing:

-

re-create the example code yourself

- writing code

- running code

- make the code work

-

do the extra get your hands dirty section & tweak the examples

- customize to your needs

- break things

- figure out how to fix them

- …

Using Code Examples

The code examples in this book may be used

- in your programs and documentation (but not too much)

- but not for selling & distribution

https://kubernetes.io/releases/release/

Chapter 1: An Introduction to DevOps and Software Delivery

I wrote an app. Now what?.

Delivery it to users! But how?

- AWS, Azure or Google Cloud (Heroku, Vercel, Netlify)?

- One server or multiple servers?

- Docker? Kubernetes?

- VPC? VPN, SSH?

- Domain name? DNS, TLS?

- Backup database?

- Why the app crashed? How to debug it?

A Primer on DevOps

Why DevOps matters?

-

The gap between companies with world-class software delivery and everyone else is 10x, 100x or even more.

-

Dora’s software delivery metrics:

-

What is it?

… of software changes Metrics Description Throughput… 🚀⏱️ Lead time How long it takes a change to go from code committed to deployed in production? 🚀🔢 Deploy frequency How often does your organization deploy code to production? Stability… 🆘🚨 Change fail percentage How often deployments cause failures that need immediate remediation (e.g. hotfix, rollback) 🚧⏱️ Recovery time How long it takes to recover from a failed deployment? -

Performance according to 2023 State of DevOps Report

Metrics Low performers Elite performers World-class performers Elite vs low performers 🚀⏱️ Lead time Deployment processes takes… 36 hours 5 mins In minutes (100% automated) 10-200x more often 🚀🔢 Deploy frequency Deploying … Once/month Many/day Anytime (Thousands/day) 10-200x faster 🆘🚨 Change fail percentage The rate of deployment causing problems… 2/3 1/20 Detect in seconds (before user-visible impact) 13x lower 🚧⏱️ Recovery time Outages last 24 hours 2 mins In minutes (sometimes automated) 700-4000x faster

-

-

It’s possible to achieve the performance of the elite (or even the world-class):

- Each of these performers may do it a little differently

- But in common, most of these performers share a lot of best practices.

important

The DevOps movement is an attempt to capture some of the best practices from the world-class performers in DORA software delivery metrics.

Where DevOps Came From

Before DevOps

-

Building a software company …

-

write the software

- which is handled by the Developers - Dev team

… also means manage a lot of hardware:

-

setup cabinets, racks -> load with servers -> install wiring, cooling -> build redundancy power systems…

- which is handled by the Operations - Ops team

-

-

An application would be:

- built by the Dev team, then

- “tossed over the wall” to the Ops team

The Ops team had to figured out the software delivery:

-

how to deploy, run, maintain… it.

-

most was done manually:

- manage the hardware

- install the app & dependencies

-

The company eventually run into problems:

- release are manual, slow, error-prone

- frequent outages, downtime

The Ops team

- reduce the release cycle (because they can handle all these manually things)

- but each release is bigger, causing more problems

Teams begin blaming each other, silos form…

After Devops

-

Instead of managing their own hardware (or data-centers)

- many companies take advantage of cloud providers (e.g. AWS, Azure, Google Cloud)

- many Ops teams spend their time working on software - e.g. Terraform, Ansible, Docker, Kubernetes - to manage the hardware.

-

Both Dev & Ops teams spend most of their time working on software:

-

The distinction between the two team is blurring.

-

There may still a separation of responsibility …

- The Dev teams are responsible for the application code

- The Ops team are responsible for the operation code

-

…but both teams need to work more closely together…

-

-

There come the DevOps movement with the goal of

- making software delivery vastly more efficient

- (building better software faster)

by moving to the cloud & shifting to DevOps mindset:

Before After After Example 👥 Teams Devs write code, “toss it over the wall” to Ops Devs & Ops work together on cross-functional teams 🧮 Servers Dedicated physical servers Elastic virtual servers AWS’s EC2 🌐 Connectivity Static IPs Dynamic IPs, service discovery 🛡️ Security Physical, strong perimeter, high trust interior Virtual, end-to-end, zero trust ⚡ Infrastructure provisioning Manual Infrastructure as Code (IaC) tools Terraform 🔧 Server configuration Manual Configuration management tools Ansible ✅ Testing Manual Automated testing CI 🚀 Deployments Manual Automated CD 💱 Change process Change request tickets 🎫 Self-service 🏧 🔢🔄 Deploy cadence (Deploy frequency) Weeks or months Many times per day 🔢🔁 Change cadence (Lead time) Weeks or months Minutes -

DevOps movement has transformed a lot of companies:

- Nordstrom:

- number of features delivered by month increased 100%

- defects reduced 50%

- lead time reduced 60%

- number of production accidents reduced 60 - 90%

- HP’s LaserJet Firmware:

- the amount spent on developing features went from 5% to 40%

- development cost reduced 40%

- Etsy:

- From infrequent deployments to 25-50 deployments/day

- Nordstrom:

The Evolution of DevOps Software Architecture & Software Delivery Process

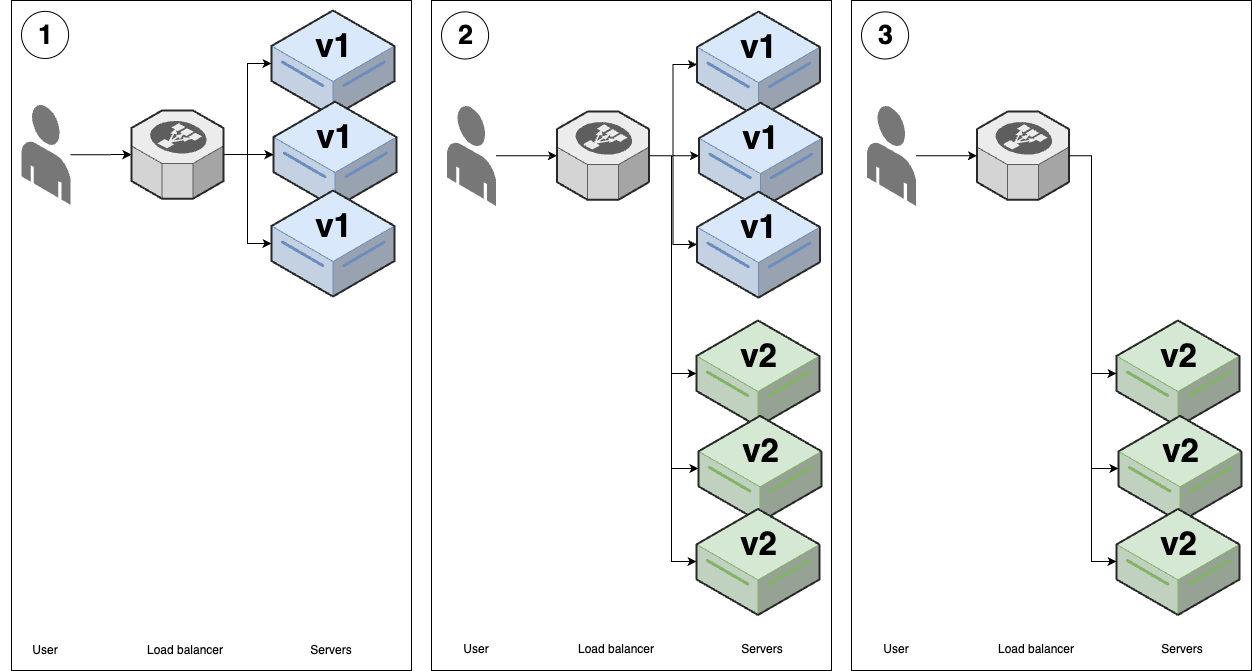

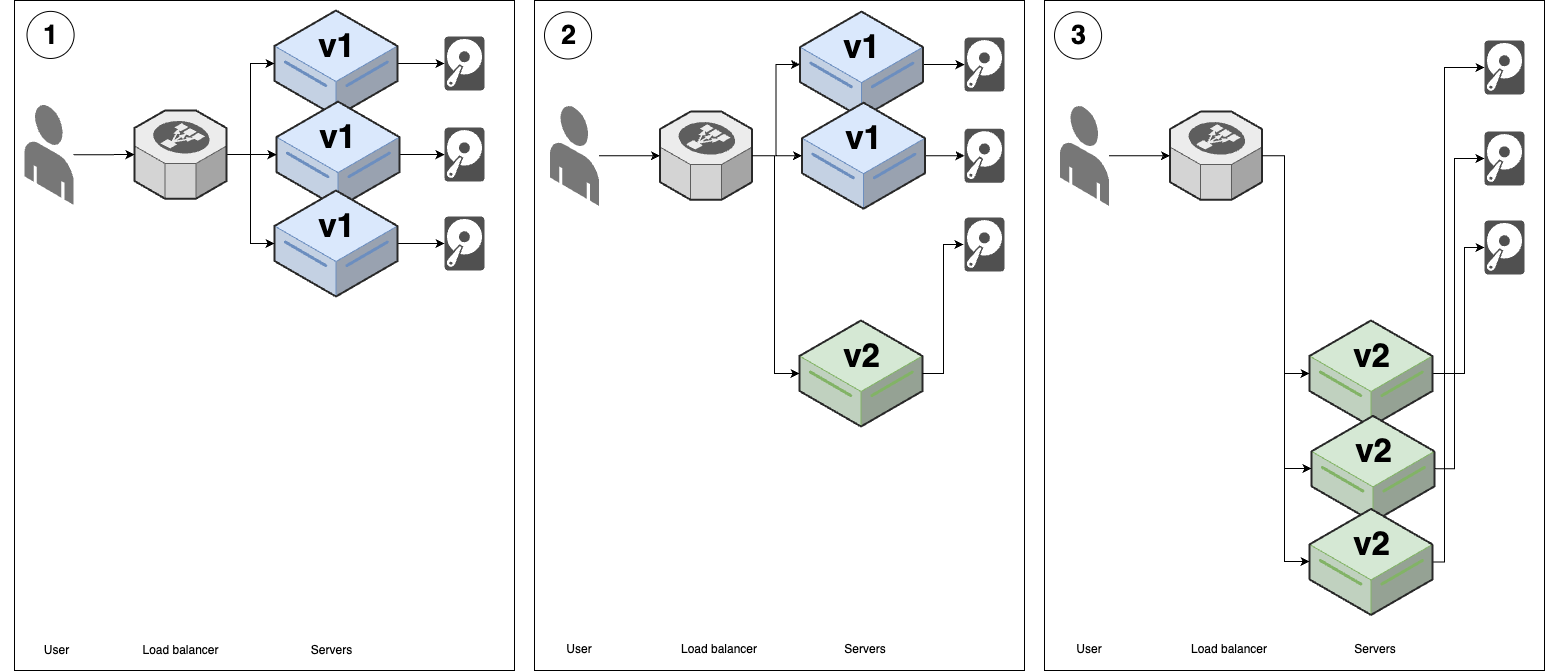

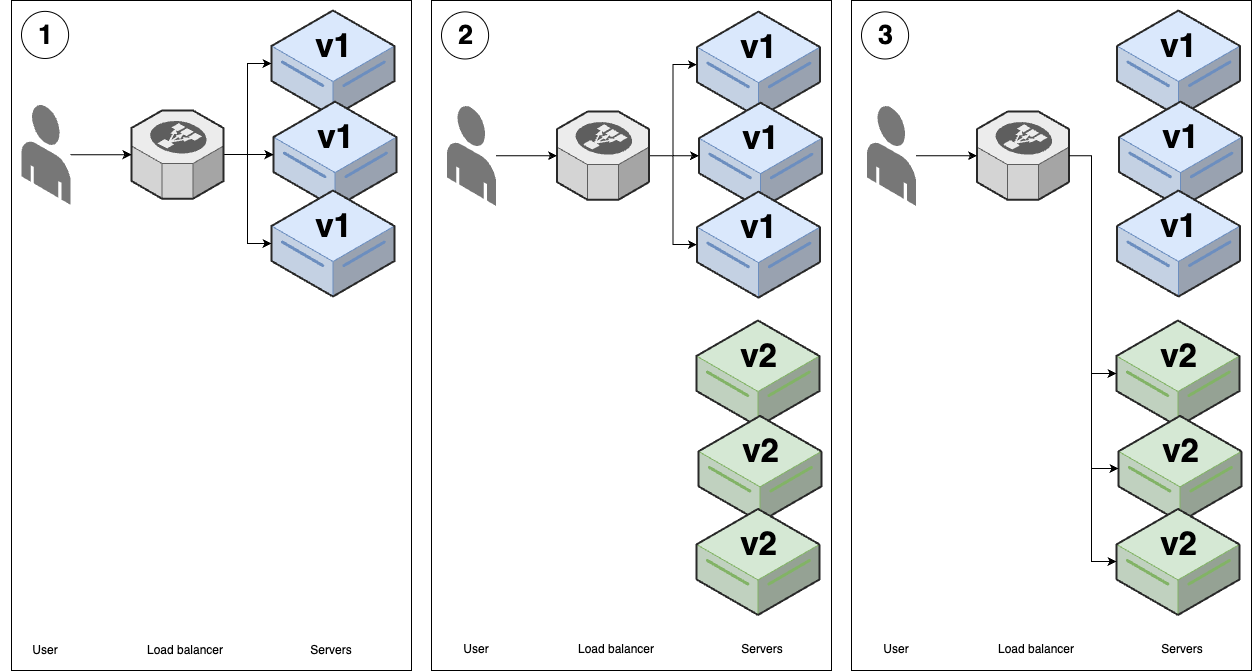

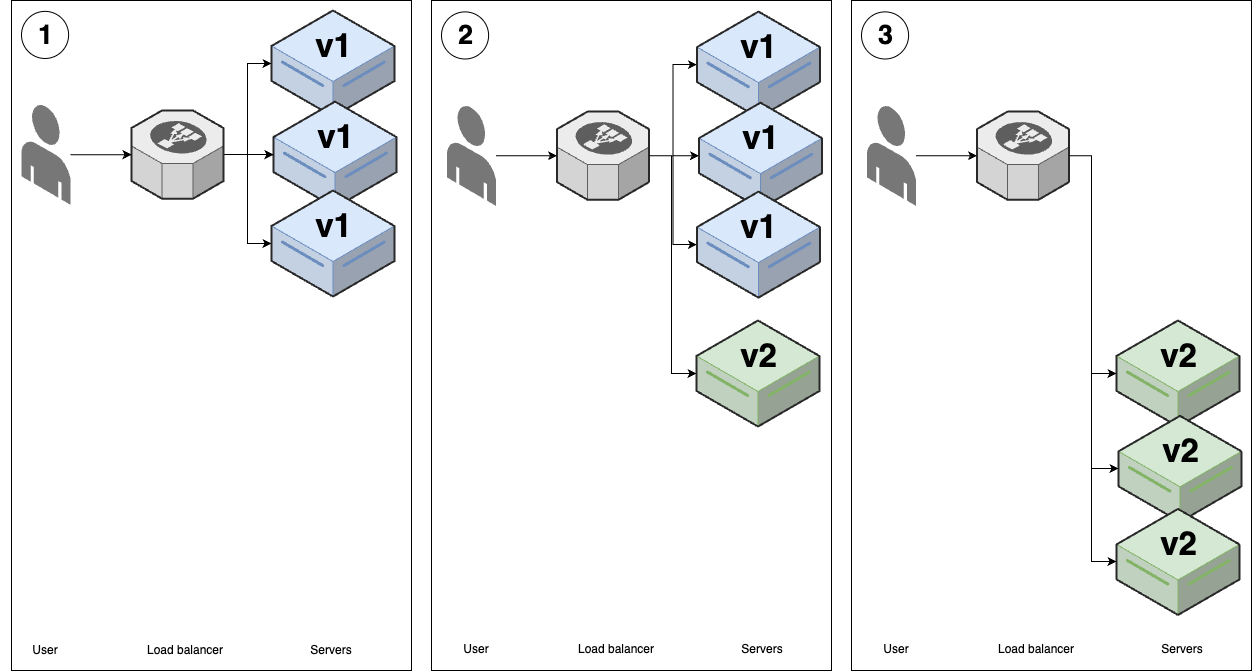

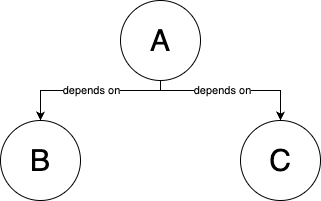

The architecture & software delivery process evolution can be broken down into:

- 3 high-level stages

- each stages consists of 3 steps

Stage 1

Stage 1 applies to most software projects start: new startups, new initiatives (at existing company), side projects.

-

Step 1:

- Single server: everything runs on a single server

- ClickOps (Process): manage infrastructure & deployment manually

User -> SERVER -

Step 2:

- Standalone database: database become a bottleneck -> break it to a separate server

- Version Control (Process): team grows -> collaborate & track changes

- Continuous Integration (Process): reduce bugs/outages -> automated tests

User -> Server -> DATABASE Developer -> VERSION + CONTINUOS CONTROL INTEGRATION -

Step 3:

- Multiple servers: a single server is not enough

- Load Balancing: distributed traffic across servers

- Networking: protect servers -> a private networks

- Data Management: scheduled backups, data migration

- Monitoring (Process): get better visibility of system

---- VPC ---------------------------- | BACKUPS | | SERVER ↑ | User -> | LOAD BALANCER -> SERVER -> Database | | SERVER | | ... | -------------------------------------- Developer -> Version + Continuos + MONITORING Control Integration

State 1 is

- simple

- fast to learn, easy to set up

- fun to work with

Most software projects never need to make it past stage 1.

note

If your application is so good and the number of users keep going - in other words, you have scaling problems - you may have to move on to the subsequent stages.

caution

Only move to the subsequent stages, if you’re facing problems that require more complex architecture & processes to solve.

- These complexity has a considerable cost.

- If you’re not facing these problems, then you can and should avoid that cost.

Stage 2

Stage 2 applies to larger, more established companies software that has larger user bases and more complexities.

-

Step 4:

- Caching for data stores: database is still a bottleneck -> add read replicas & caches

- Caching for static content: traffic grows -> add CDN for content that doesn’t change often

---- VPC ----------------------------------------- | Backups | | ↑ | User -> | CDN -> Load balancer -> Servers -> Database | | (CACHE) ↓ | | CACHE | -------------------------------------------------- Developer -> Version + Continuos + Monitoring Control Integration -

Step 5: team size become a problem, deployment is slow, unreliable

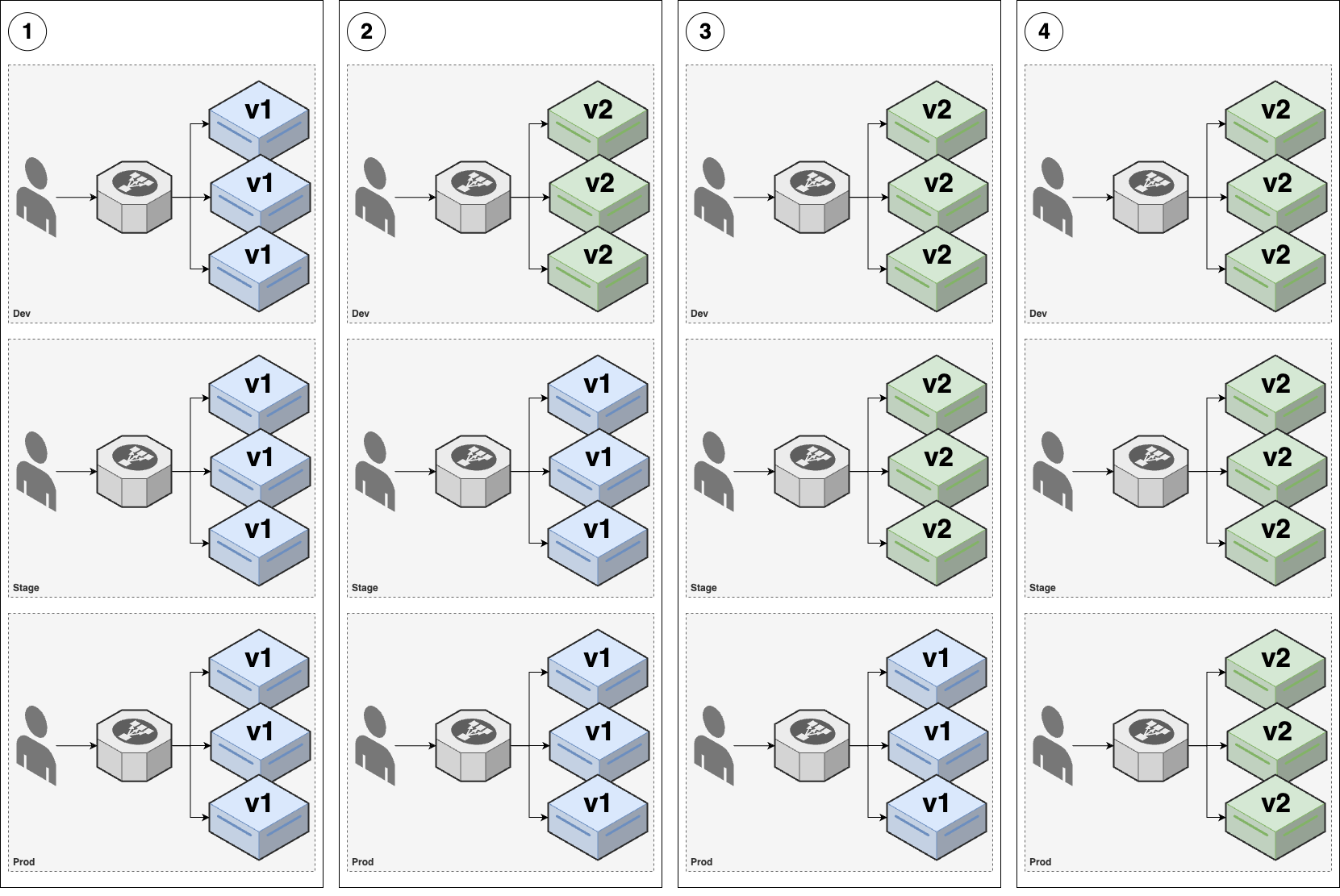

- Multiple environments: to help teams do better testing. Each env is a full copy of infrastructure, e.g. dev, stage, prod

- Continuous delivery (Process): fast/reliable deployment -> deployment pipeline

- Authentication & secrets (Process): a little security

---- VPC ------------------------------------- _ | Backup | |_ | ↑ | | | User -> | CDN -> Load balancer -> Servers --> Database | | | | ↓ | | | |PROD Cache | | | ---------------------------------------------- | | |STAGE | | ---------------------------------------------- | |DEV | ---------------------------------------------- Developer -> Version + Continuos + CONTINUOS + Monitoring + AUTH, Control Integration DELIVERY SECRETS -

Step 6: teams keep growing to keep moving quick

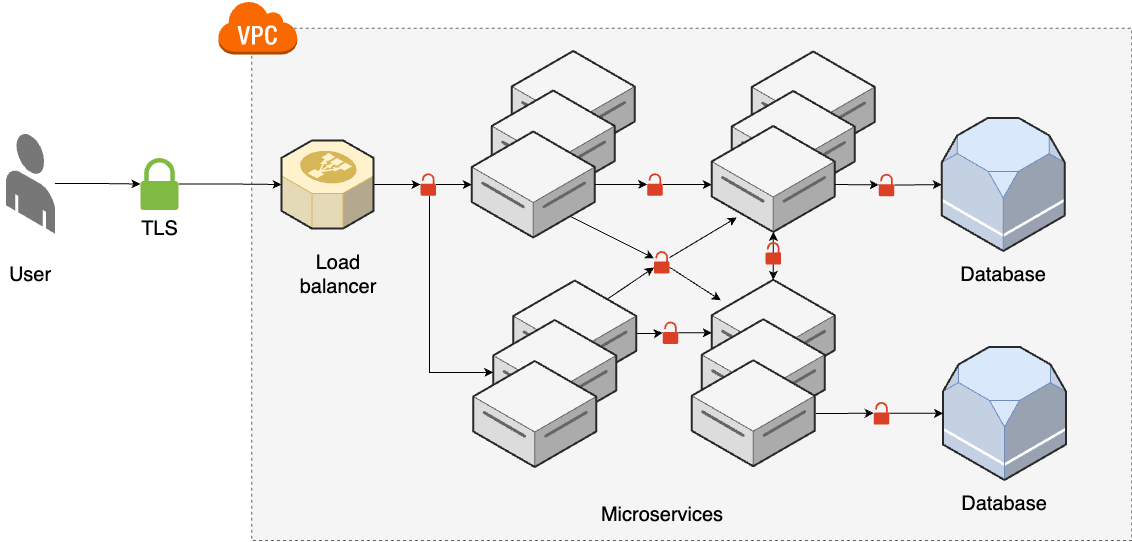

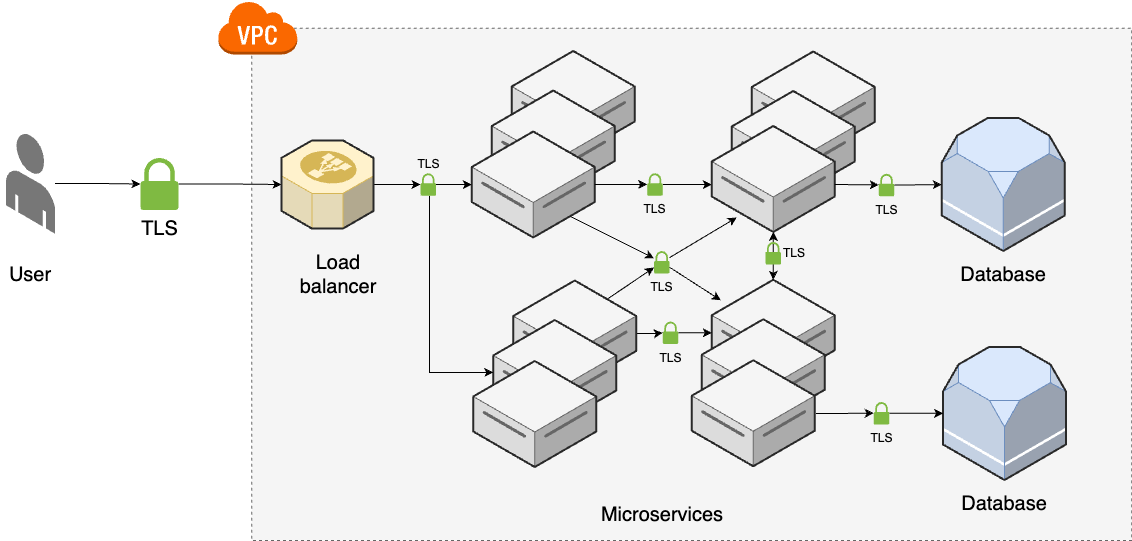

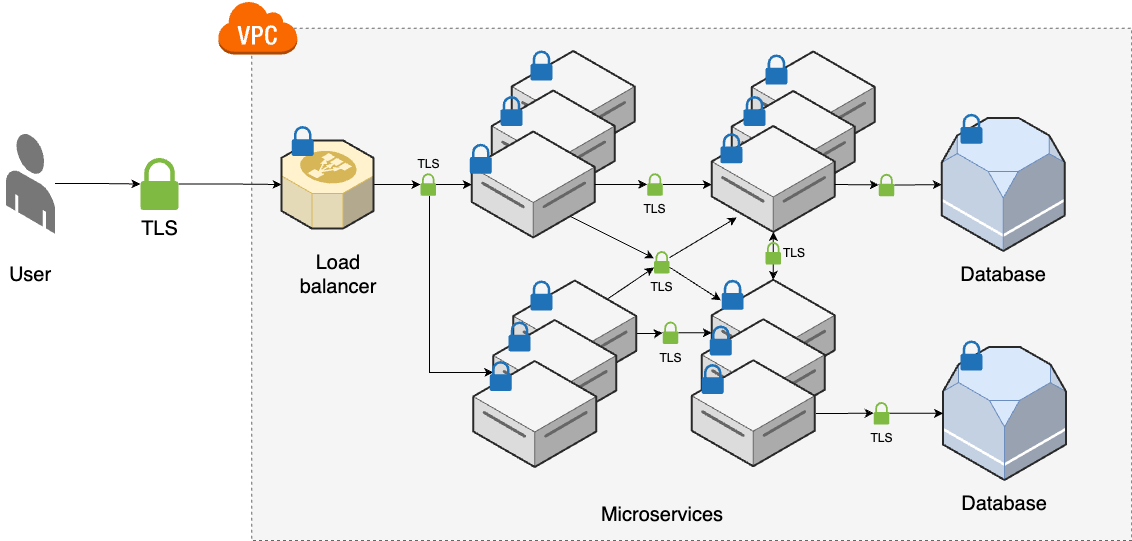

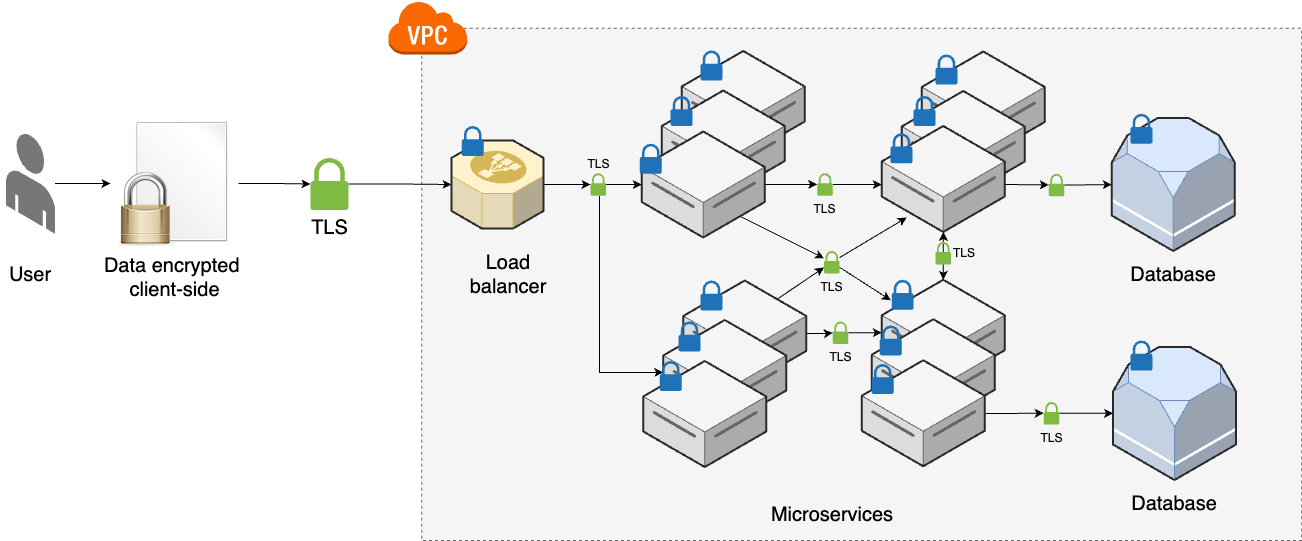

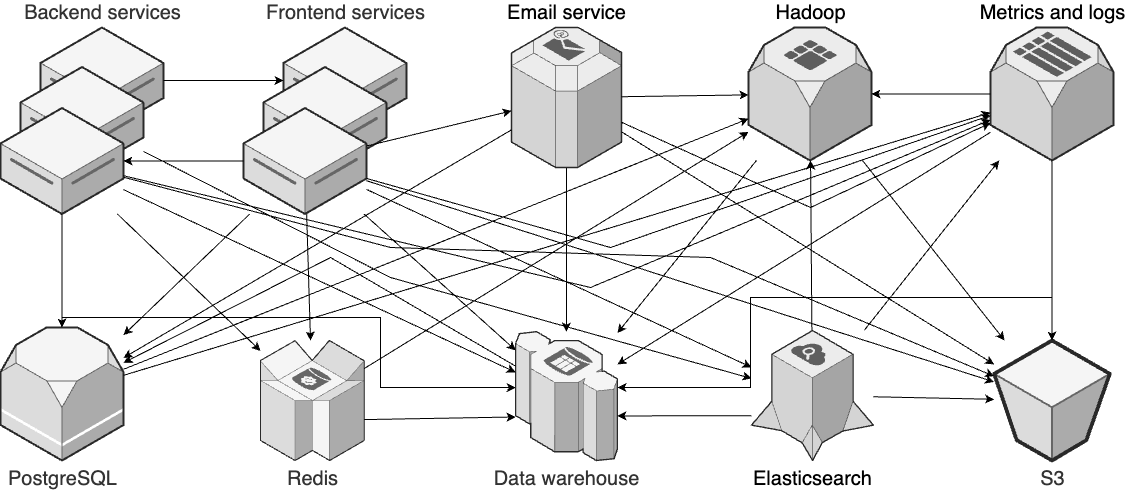

- Microservices: allow teams work independently, each microservice comes with its own data store & caches.

- Infrastructure as Code (Process): infrastructure for all microservices is a too much to be managed manually.

---- VPC ---------------------------------------------------- _ | Cache Backups | |_ | ↑ ↑ | | | | ------> SERVICES <-> SERVICES --> Database | | | | | ↕ ↕ ↕ | | | User -> | CDN -> Load balancer -> SERVICES <-> SERVICES --> Database | | | | ↓ ↓ | | | |prod Cache Backups | | | ------------------------------------------------------------- | | |stage | | ------------------------------------------------------------- | |dev | ------------------------------------------------------------- Developer -> Version + Continuos + Continuos + Monitoring + Auth, + INFRASTRUCTURE Control Integration Delivery Secrets AS CODE

Stage 2 represent a significant step up in terms of complexity:

- The architecture has more moving parts

- The processes are more complicated

- The need of a dedicated infrastructure team to manage all of this.

Stage 3

Stage 3 applies to large enterprises with massive user bases.

-

Step 7: massive user bases

- Observability: More visibility <- Tracing + observability

- Service discovery: So many microservices, how to communicate with each other?

- Server & networking hardening -> Compliance standard, e.g. PCI, NIST, CIS

- Service mesh: Unified solution for manage microservices -> all items about + load balancing + traffic control, error handling

---- VPC ---------------------------------------------------------------- _ | Cache Backups | |_ | ---------------------------------- ↑ ↑ | | | | | Services <--> Services-----|------> Database | | | | | | | | | | | OBSERVABILITY | | | | | | | | | | | | | | | | User -> | CDN -> Load -> | ↕ SERVICE ↕ | | | | | balancer | DISCOVERY | | | | | | | | | | | | | | | | | | HARDENING | | | | | | | | | | | | Services <--> Services-----|------> Database | | | | --------------------------------- ↓ ↓ | | | |prod SERVICE MESH Cache Backups | | | ------------------------------------------------------------------------- | | |stage | | ------------------------------------------------------------------------- | |dev | ------------------------------------------------------------------------- Developer -> Version + Continuos + Continuos + Monitoring + Auth, + Infrastructure Control Integration Delivery Secrets as Code -

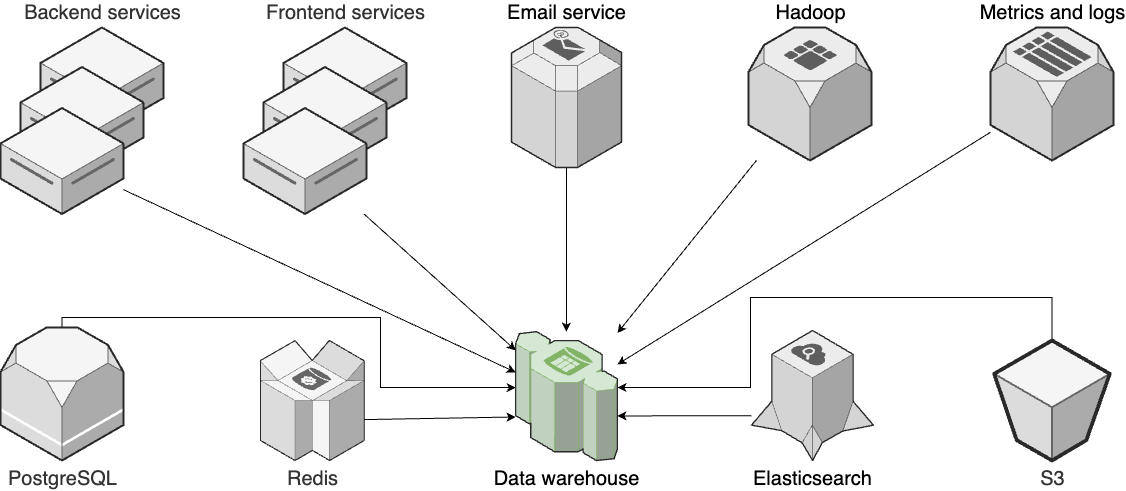

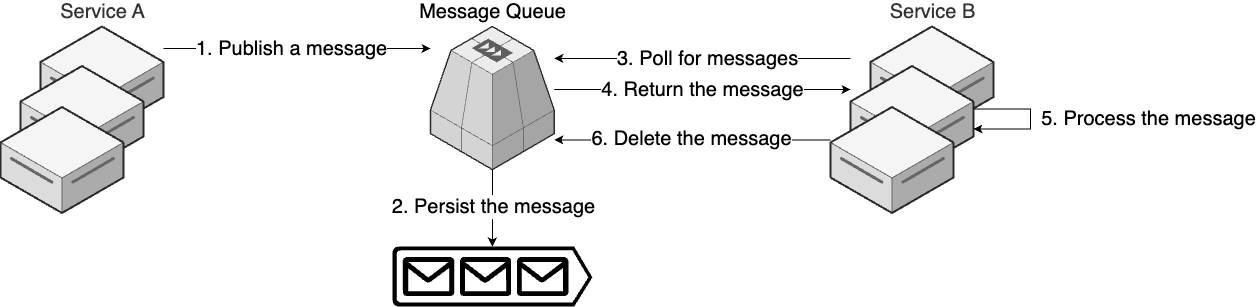

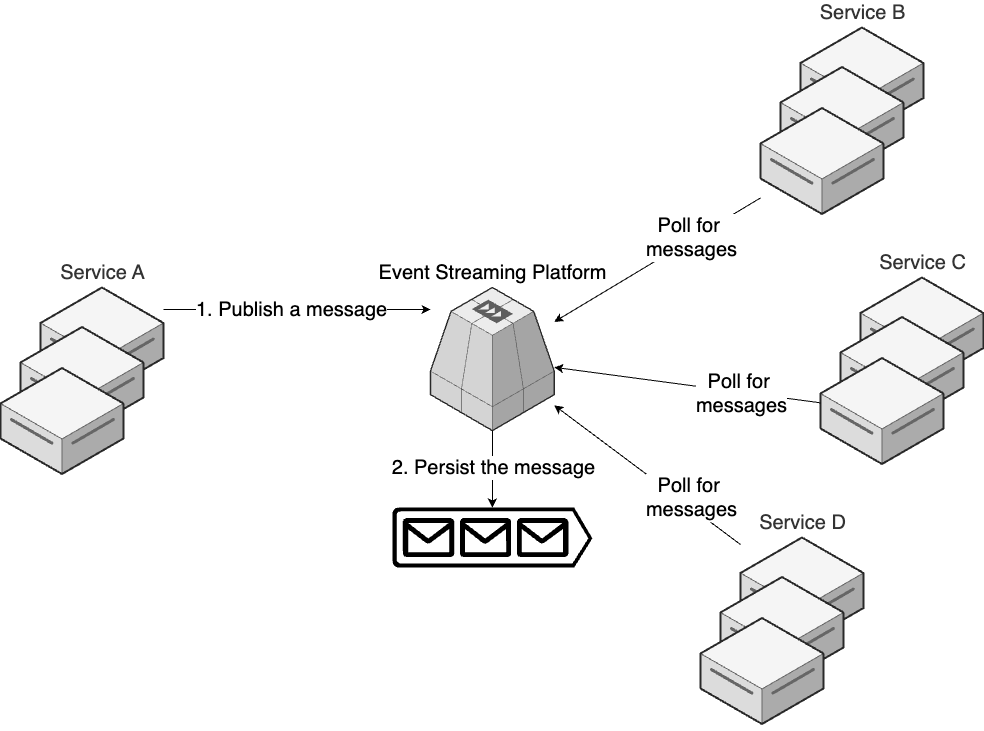

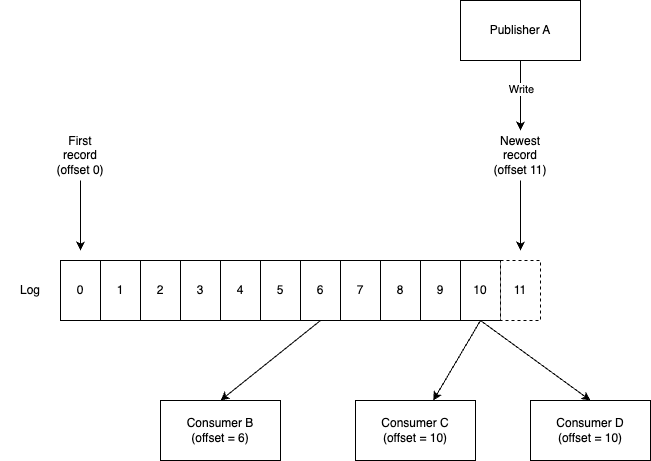

Step 8: a lot of data from users

- Analytics tools: process & analyze data <- data warehouse/lake, machine learning platforms…

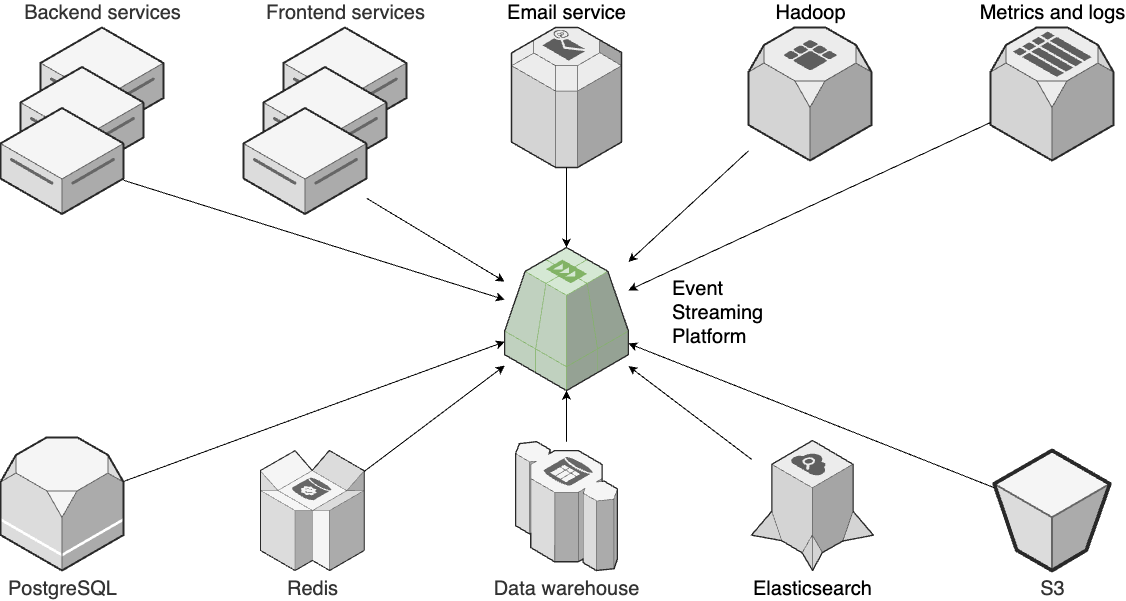

- Event bus: more microservices, more data -> event bus -> event-driven architecture

- Feature toggles & canary deployment (Process): deploy faster, more reliable <- advanced deployment strategies

---- VPC -------------------------------------------------------------------------- _ | Cache Backups | |_ | ---------------------------------- ↑ ↑ | | | | | Services <--> Services-----|------> Database ---- | | | | | | | | | | | | Observability | | | | | | | | | | | | | | | ↓ | | | User -> | CDN -> Load -> | ↕ Service ↕ | DATA | | | | balancer | Discovery | WAREHOUSE | | | | | | ↑ | | | | | | | | | | | | | | Hardening | | | | | | | | | | | | | | | | Services <--> Services-----|------> Database ---- | | | | | --------------------------------- ↓ | ↓ | | | | | | Service Mesh | Cache | Backups | | | | | ↓ ↓ ↓ ↓ ↓ | | | | EVENT BUS ======================================================= | | | |prod | | | ----------------------------------------------------------------------------------- | | |stage | | ----------------------------------------------------------------------------------- | |dev | ----------------------------------------------------------------------------------- Developer -> Version + Continuos + Continuos + Monitoring + Auth, + Infrastructure + FEATURE + CANARY Control Integration Delivery Secrets as Code TOGGLE DEPLOYMENT -

Step 9:

- Multiple data centers: -> global user base

- Multiple accounts: larger employee base -> isolate teams/products

- Advanced networking: connect data centers, accounts

- Internal developer platform (Process): boost developer productivity; ensure all accounts are secure <- account baseline/factory

----> DATA (With all the infrastructure as in data center 1) | CENTER 2 | | | | ---- VPC -------------------------------------------------------------------------- _ | | | Cache Backups | |_ | ADVANCED | ---------------------------------- ↑ ↑ | | | | NETWORKING| | Services <--> Services-----|------> Database ---- | | | | | | | | | | | | | | | | Observability | | | | | | | | | | | | | | | | | | | ↓ | | | User -> DATA | CDN -> Load -> | ↕ Service ↕ | Data | | | CENTER 1| balancer | Discovery | Warehouse | | | | | | ↑ | | | | | | | | | | | | | | Hardening | | | | | | | | | | | | | | | | Services <--> Services-----|------> Database ---- | | | | | --------------------------------- ↓ | ↓ | | | | | | Service Mesh | Cache | Backups | | | | | ↓ ↓ ↓ ↓ ↓ | | | | Event Bus ======================================================= | | | |prod | | | ----------------------------------------------------------------------------------- | | |stage | | ----------------------------------------------------------------------------------- | |dev | ----------------------------------------------------------------------------------- Developer -> Version + Continuos + Continuos + Monitoring + Auth, + Infrastructure + Feature + Canary + Developer Control Integration Delivery Secrets as Code Toggle Deployment Platform

Stage 3 applies for company with the toughest problems that deal with the more complexity: global deployments, thousands of developers, millions of users.

note

The architecture in stage 3 is still a simplification to what the top 0.1% of the companies face.

Adopting DevOps Practices

Which DevOps practices to adopt?

important

KEY TAKEAWAY #1.1 You should adopt the architecture & software delivery processes that are appropriate for the stage of your company

caution

Don’t immediately jump to the end and use the architecture & processes of the largest, most elite companies:

- You don’t have the same scale

- You don’t have the same problems to solve

Their solutions may not be a good fit for you.

How to adopt DevOps practices?

The key to a success of adopting DevOps (or any migration project) is to do it incrementally:

-

Split up the work in a way that every step brings its own value, even if the later steps never happen

-

Don’t fall into false incrementalism where all steps need to be completed before any step can bring value.

There is a big changes that the projects gets:

- modified

- paused or even cancelled

important

KEY TAKEAWAY #1.2 Adopt DevOps incrementally, as a series of small steps, where each step is valuable by itself.

caution

Avoid “big bang” migration (all or nothing).

tip

Focus on solving small, concrete problem one at a time.

e.g.

- Migrate to cloud:

- Instead of migrating all teams at the same time

- Identifying one small, specific app/team -> Migrate just that app/team

- Adopt DevOps:

- Instead of applying all processes

- Identifying one small problem, e.g. outages during deployment -> Automate the deployment steps

Even if the larger migration doesn’t work, at least

- one team is more successful

- one process works better

An Introduction to Deploying Apps

Run an App Locally

Example: Run the Sample App Locally

-

A Node.js “Hello, World” - a web server

// app.js const { createServer } = require("node:http"); const server = createServer((req, res) => { res.writeHead(200, { "Content-Type": "text/plain" }); res.end("Hello, World!"); }); const port = 8080; server.listen(port, () => { console.log(`Server listening on port ${port}`); }); -

Install Node.js (if you haven’t installed)

-

Run the app

node app.js -

Open link http://localhost:8080 in browser

note

By default, when you run a web server on your computer:

- It’s only available on localhost.

- In other words, the web server can only be accessed from your computer.

tip

The localhost is a hostname - configured on every computer - points back to the loopback network interface (which is typically 127.0.0.1)

The problem with expose an app run on your personal computer

-

Security

Your personal computer (PC) is not hardened:

- There’s a lot of app installed. The more apps running, the more likely an app has an CVE that could be exploited by attacker.

- There is your personal data (documents, photos, videos, passwords…)

-

Availability

Your PC might:

- be accidentally shutdown.

- not be designed to run 24/7.

-

Performance

If you’re using your PC,

- that might take away system resources from your app,

- which might cause performance issues for your users.

- that might take away system resources from your app,

-

Collaboration

If your app has a bug, or needs to be updated:

- someone (coworkers, collaborators…) needs to access to your PC,

- should you give them access to your personal data? No!

important

KEY TAKEAWAY #1.3 You should never expose apps running on a personal computer to the outside world.

When to expose an app that runs on your PC

You can deploy an app locally, and expose that app only when:

- You’re exposing it to a trusted 3rd-party, (e.g. a coworker)…

- … to get feedback

tip

You can use tunnelling tools, e.g. localtunnel, ngrok, btunnel, localhost.run

- to get a temporary URL of your app

Then give someone you trust that URL to access your app.

Why many businesses still expose their critical apps from a PC

Maybe because of:

- The company has resource constrained, e.g. a tiny startup

- The person running the app doesn’t know any better

- The software delivery process is so slow, cumbersome; sneaking the app in a personal computer is the quickly way to get it running.

The solutions:

-

For 1, it’s the cloud.

-

For 2 & 3, it’s reading this book:

- You know better (2)

- You know how to create a software delivery process that allow your team to quickly & easily run their apps the right way: on a server. (3)

Deploying an App on a Server

There are 2 ways to get access to servers:

- On prem: Buying & setting up your own servers, e.g. Dell R7625 Rack Server[^1]1

- In the cloud: You rent servers from others, e.g. AWS EC2

Deploying On Prem Versus in the Cloud

on-prem : Abbreviated for on-premises software : Software you run: : - on your own servers : - in a physical location you own: e.g. your garage/office/data center

in the cloud : Software you run: : - on servers in a cloud computing platform, e.g. AWS, Azure : In other words, you rent servers from a cloud platform via a software interface, and use these rented servers to run your software.

When to Go with the Cloud

Using the cloud should be the default chose because of the following advantages:

| Advantage | Explain |

|---|---|

| Pay-as-you-go | - No up-front cost for hardwares - Extremely cheap or even free in early days |

| Maintenance & expertise | - No need to maintain hardwares - or hiring experts to do that |

| Speed | - No need to wait for the hardwares: order, ship, assemble… |

| Elasticity | - No need to plan long in advance |

| Managed services | - No need to maintain your own primitive services: databases, load balancers, storages, networking… |

| Security | - The cloud is secure by designed, with a huge amount of resources (time, expertise) |

| Global reach | - The cloud has data centers on over the world - By using the cloud, your team can be anywhere in the world |

| Scale | - The cloud is massive & growing at incredible rate |

important

KEY TAKEAWAY #1.4 Using the cloud should be your default choice for most new deployments these days.

When to Go with On-Prem

| On-prem makes sense when… | Explain | Note |

|---|---|---|

| You already have an on-prem presence | - If it ain’t broke, don’t fix it | |

| - The cost of a migration to the cloud might outweigh the benefit | ||

| You have load patterns that are a better fit for on-prem | - For some load patterns, cloud provides might charge a lot of mony, e.g. bandwidth & disk-space usage | Don’t forget the cost of maintenance for the hardware |

| - Your traffic is huge, but steady & predictable and you can afford your own servers. | ||

| - You need access to some hardware that’s not available in the cloud, e.g. CPUs, GPUs… | ||

| Compliance | - Some compliance standards, regulations, laws… have not yet adapted to the cloud |

When to Go with Hybrid

hybrid : a mixture of cloud & on-prem

The most uses cases of hybrid cloud:

| Use case | Explain |

|---|---|

| Partial cloud migration | - New apps are deployed to the cloud |

| - Some apps are migrated to the cloud | |

| - The rest are kept on-prem | |

| Right tool for the right job | You have both type of load pattern: |

| - An app has traffic spikes on holidays -> Cloud | |

| - Another app uses lots of disk space & bandwidth -> On-prem |

Two types of cloud: PaaS and IaaS

There are 2 types of cloud:

-

IaaS - Infrastructure as a Service

IaaS gives you access directly to the low-level primitives computing resources, e.g. servers, so

- you can create your own software delivery process.

e.g. Amazon AWS, Microsoft Azure, Google Cloud

-

PaaS - Platform as a Service

PaaS gives you a full, opinionated software delivery process.

e.g. Heroku, Netlify, Fly.io, Vercel, Firebase, Render, Railway, Platform.sh

tip

One of the first service from AWS (the first cloud that came out in 2006) is Elastic Compute Cloud (EC2), which allow you to rent servers from AWS.

This is the first Infrastructure as a Service (IaaS) in the market.

EC2 gives you access directly to the (low-level) primitive computing resources - the server.

tip

A year later, in 2007, Heroku came out with one of the first Platform as a Service (PaaS) offerings, which focus on high-level primitive.

In additional to the infrastructure, e.g. server, Heroku also provides a full, opinionated software delivery process:

- application packaging

- deployment pipelines

- database management

- …

Deploying An App Using PaaS

note

The examples in this chapter use Fly.io as the PaaS

tip

Why Fly.io?

- Provides $5 free credits -> the example can be running without cost anything.

- Support automatically packaging code for deployment via Buildpacks -> code can be deployed without any build system, Docker image…

- Has a CLI tool

flyctl-> deploy code straight from your computer.

Example: Deploying an app using Fly.io

-

Step 1: Install

flyctl -

Step 2: Sign up & sign in

fly auth signup fly auth login -

Step 3: Configure the build

# examples/ch1/sample-app/fly.toml [build] builder = "paketobuildpacks/builder:base" buildpacks = ["gcr.io/paketo-buildpacks/nodejs"] [http_service] internal_port = 8080 force_https = true auto_stop_machines = true auto_start_machines = true min_machines_running = 0

tip

For real-world applications, flyclt can recognize many popular app frameworks automatically and you wouldn’t this config file.

-

Step 4: Launch the app

fly launch --generate-name --copy-config --yes

Get your hands dirty with Fly.io

- Check the app status with

fly status - See the app logs with

fly logs, or https://fly-metrics.net - Scale the numbers of servers up & down with

fly scale - (Make a change then) Deploy a new version of the app with

fly deploy

note

When working with the cloud, make a habit of undeploy any things you don’t need anymore.

- For fly.io, it’s by using

fly apps destroy <NAME>

How PaaS stacks up

A Paas provides:

- not just the low-level primitives, e.g. the servers “🖥️”

- but also the high-level primitives - powerful functionalyity out-of-the-box, such as:

- ⬆️⬇️ Scaling servers

- 🌐 Domain names

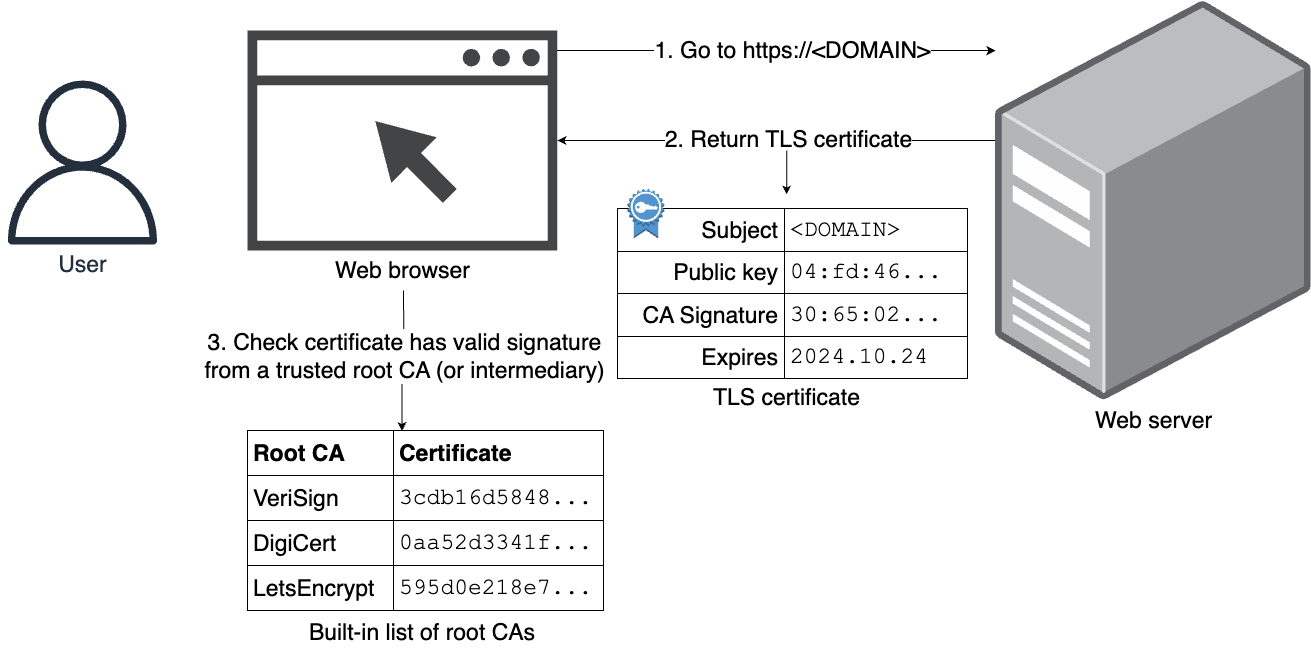

- 🔒 TLS certificates & termination

- 📊 Monitoring

- 🤖 Automated deployment

These high-level primitives is what make PaaS magic - it just works.

In a matter of minutes, a good PaaS take care of so many software delivery concern for you.

warning

The magic of PaaS is also the greatest weakness of PaaS.

- Everything is happenning behind the scenes. If something doesn’t work, it can be hard to debug/fix it.

- There is a lot of limitation:

- What you can deploy

- What types of apps you can run

- What sort of access you can have to the underlying hardware

- What sort of hardware is available

- …

note

Many projects start on PaaS, then

- migrate to IaaS if they grow big enough and require more control.

Deploying an App Using IaaS

-

There are 3 types of IaaS: VPS, CDN, cloud providers:

IaaS type Description Example VPS Providers - Provide access to the Virtual Private Servers (VPSs) as cheap as possible Hetzner, DigitaOcean, Vultr… - aka VPS Hosting Providers, might offer other features, e.g. networking, storage… CDN Providers - Provide access to Content Delivery Network - CDN servers2 CloudFlare, Akamai, Fastly - Might also offer: DDoS protection… Cloud Providers - Very large companies provides general-purpose cloud solutions for everything: VPS, CDN, serverless, edge computing, data/file storages… Amazon Web Services (AWS), Microsoft Azure, Google Cloud Platform (GCP) Alibaba Cloud, IBM Cloud -

In general, VPS and CDN providers are

-

specialists in their respective area,

-

so they will beat a general cloud in term of features & pricing in those areas.

e.g. A VPS from Hetzner is usually much faster & cheaper than from AWS.

-

-

if you only need the features in their area, better off going with them.

-

-

If you are

- building the infrastructure for the entire company,

- especially one that is in later stages of its DevOps evolution,

- your architecture usually needs many types of infrastructure

- the general-purpose cloud providers will typical a better fit.

- building the infrastructure for the entire company,

Example: Deploying an app using AWS

-

Step 1: Sign up for AWS

After you signed up,

- you initially sign in as the

root user, which has full permissions to do anything in the AWS account. - you can create

IAM user- which is more-limited user account within your AWS account.

[!WARNING] Never use your AWS

root userfor daily tasks. - you initially sign in as the

-

Step 2: Create an IAM user.

Use the Identity and Access Management (IAM) service to:

- create an IAM user

- manage IAM users

- add permissions to that IAM user via IAM policy, which can be attached

- directly to the IAM user

- or via IAM group

- add permissions to that IAM user via IAM policy, which can be attached

After you create an IAM user, AWS will show you the security credentials for that users: 1. Sign-in URL, 2. Username, 3. Console password.

[!TIP] The password is called console password because it’s used for signing in to the AWS Management Console - the web application that manage your AWS account.

[!TIP] Keep both the root user’s password and IAM user’s password in a password manager, e.g.

1Password,BitWarden -

Step 3: Login as the IAM user.

Go the the sign-in URL and sign in with the IAM user credential.

[!TIP] The sign-in URL is unique for each AWS account.

In other words, each AWS account has it own authenticated & authorization system.

-

Step 4: Deploy an EC2 instance.

Use the AWS Elastic Compute Computing (EC2) Service to deploy an EC2 instance:

-

Click

Launch instance -

Fill in name of the instance

-

Choose the Application & OS Images (Amazon Machine Image - AMI)

- Use the default -

Amazon Linux

- Use the default -

-

Choose the Instance type, which specifies the type of server: CPU, memory, hard drive…

- Use the default -

t2.microort3.micro(Small instance with 1 CPU, 1GB of memory that including in AWS free tier)

- Use the default -

-

Choose

Proceed without a key-pairbecause you’re not going to use SSH for this example -

Configure Network settings:

-

Use the default settings:

- Network:

Default VPC - Subnet: No preference -

Default subnet

- Network:

-

Firewall (Security group): Choose

Create security groupwith the rules:- Disable

Allow SSH traffic from - Enable

Allow HTTP traffic from the internet<- This allows inbound TCP traffic on port 80 so the example app can receive requests and response with “Hello, World!”

[!NOTE] By default, EC2 instances have firewalls, called security groups that don’t allow any network traffic in or out.

- Disable

-

-

Configure User data:

[!NOTE] User data is a script that will be executed by the EC2 instance the very first time it boots up

Fill in a Bash script that:

- Install

node.js - Get the code for example server (a simple Node server in a file)

- Run the server (and ignore hangup signals by using

nohup)

- Install

-

caution

Watch out for snakes: These examples have several problems

| Problem | What the example app does | What you should do instead |

|---|---|---|

| Root user | The app is running from user data, which runs as root user. | Run apps using a separate OS user with limited permissions. |

| Port 80 | The app is listening on port 80, which required root user permissions. | Run apps on ephemeral ports - port greater than 1024. |

| User data’s limit | The app put all its code & dependencies in user data, which is limited to 16KB. | User configuration management tool or server templating tools. |

| No process supervision | The app is started by user data script, which only run on the first boot. | Use process supervisors to restart that app if it crashes, or after server reboots. |

| Node.js specifics | The app is run in development mode, which only a have minimum of logging and doesn’t have optimized performance | Run Node.js in production mode3. |

Get your hands dirty with AWS

- Restart your EC2 instance: Does the app still work? Why (not)?

- Create a custom security group opens up port 8080.

- Find logs/metrics about the EC2 instance, compare with monitoring from fly.io.

How IaaS stacks up

Comparing PaaS and IaaS

When to Go with PaaS

tip

Your customers don’t care what kind of CI/CD pipeline you have:

- Whether you’re running a fancy Kubernetes cluster

- Whether you’re on the newest NoSQL database

- …

All they matters is you can create a product that meets your customers’ needs.

important

KEY TAKEAWAY #1.5 You should spend as little time on software delivery as you possibly can, while still meeting your company’s requirements.

- If you can find a PaaS meets your requirements, you should:

- use it & stick with it as long as you can.

- avoid re-creating all those software delivery pieces until you absolutely have to.

The following use cases is a good fit for PaaS:

-

🛝 Side projects

Focus all your time on the side project itself, instead of wasting any time to the software delivery process.

-

🚀 Startup & small companies

A startup lives or dies based on its product - something the market wants.

- Invest all the time/resources to the product.

- Only when you’re facing the scaling problem, which means you’ve found your product/market, start thinking of moving of PaaS.

-

🧪 New & experimental projects (at established companies)

Established companies might have invested in IaaS but still have a slow & inefficient software delivery process:

- by using PaaS, you can quickly launch something & iterate on it.

When to Go with IaaS

Only move to IaaS when a PaaS can no longer meet your requirements, which means you’re facing the following problems:

-

🪨 Load & scaling:

When you are dealing with a huge a mount traffic:

- In other words, you’re facing the scaling problem (and have found your product)

- PaaS might no longer meet your requirements:

- The pricing of PaaS might become prohibitively.

- The supported architectures by PaaS is limited

a migrate to IaaS is require to handling that load & scaling.

-

🍕 Company size

For companies with dozens of teams with hundreds or thousands of developers, PaaS offers for governance & access controls might be not enough.

e.g.

- Allow some teams to make changes, but not the others

-

🅱️ Availability

Your business might have a higher level than what PaaS offers for uptime guarantees (SLOs, SLAs)

PaaS offerings are limited in term of visibility & connectivity options, e.g.

- Many PaaS don’t let you SSH to the server, when there is an outage/bug you can’t know what really happening.

[!NOTE] Heroku - the leading PaaS - only supports SSH into a running server after a decade.

-

🛡️ Security & compliance

If your business needs to meet some strict security, compliance requirements - e.g. PCI, GCPR, HIPPA - IaaS might be the only option.

important

KEY TAKEAWAY #1.6 Go with PaaS whenever you can; go with IaaS when you have to.

Conclusion

- Adopt the architecture & software delivery processes that are appropriate for your stage of company

- Adopt DevOps incrementally, as a series of small steps, where each step is valuable by itself

- You should never expose apps running on a PC to the outside world

- Using the cloud should be your default choice for most new deployments these days

- You should spend as little time on software delivery as you possibly can, while still meeting your company’s requirements

- Go with PaaS whenever you can; go with IaaS when you have to

https://www.dell.com/en-us/shop/ipovw/poweredge-r7625

https://world.hey.com/dhh/the-hardware-we-need-for-our-cloud-exit-has-arrived-99d66966

CDN servers are distributed all over the world, to serve & cache content, especially static assets, such as images, CSS stylesheets, JavaScript bundles.

Chapter 2: How to Manage Your Infrastructure as Code

ClickOps and IaC

ClickOps

ClickOps : clicking through an web UI of a cloud provider’s website to configure computing infrastructure

The problems of ClickOps:

- Deployments are slow & tedious → You can’t deploy more often

- Deployments are error-prone → Bugs, outages…

- Only one person knows how to deploy → If that person is overloaded, everything takes ages; there is also bus factor

Infrastructure as Code

Infrastructure as Code (IaC) : You write & execute code to define, deploy, update, destroy your infrastructure : This marks a shift in mindset in which : - all aspects of operations are treated as software : - even those represent hardware, e.g. setting up a server

-

With modern DevOps, you can manage almost everything as code:

Task How to manage as code Example Chapter Provision servers Provisioning tools Use OpenTofuto deploy a serverThis chapter (2) Configure servers Configuration management & templating tools Use Packerto create an image of a serverThis chapter (2) Configure apps Configuration files & services Read configuration from a JSONfile during bootConfigure networking Provisioning tools, service discovery Use Kubernetes’s service discoveryBuild apps Build systems, continuous integration Build your app with npmTest apps Automated tests, continuous integration Write automated tests using JestDeploy apps Automated deployment, continuous delivery Do arolling deployment with KubernetesChapter 3 Scale apps Auto scaling Set upauto scaling policies in AWSChapter 3 Recover from outages Auto healing Set upliveness probes in KubernetesChapter 3 Manage databases Schema migrations Use Flywayto update your database schemaTest for compliance Automated tests, policy as code Check compliance using Open Policy Agent (OPA) -

For infrastructure, there are 4 type of IaC tools:

IaC tool Example Ad-hoc scripts Use a Bashscript to deploy a server.Configuration management tools Use Ansibleto deploy a server.Server templating tools Use Packerto create an image of a server.Provision tools Use OpenTofuto deploy a server.

The Benefits of IaC

When your infrastructure is defined as code:

- the entire deployment process can be automated

- you can apply software engineering practices (to your software delivery processes)

which bring a lot of benefits:

| How? | The benefit | |

|---|---|---|

| 🤳 Self-service | Code → Automated | Developers can kickoff their own deploy whenever necessary |

| 💨 Speed & safety | Code → Automated → Computers do it better than human | Deployments can be significantly faster; consistently & not prone to manual error |

| 📚 Documentation | The state of your infrastructure is in the source code | Every one can understand how things work |

| 🏷️ Version control | The infrastructure (as code) can be tracked by a version control, e.g. git | The entire history of infrastructure is now in the commit log. |

| ✅ Validation | The state of your infrastructure can be*“tested” just as code* | You can perform: code review, automated tests, analysis tools |

| 🔁 Reuse | Your infrastructure can be packaged intoreusable modules | You can easily build your infrastructure on top of documented, batted-tested modules. |

| 😀 Happiness | IaC allows both computer & developers to what they do best (automation & coding) | Everyone is happy. No more repetitive & tedious deploy task. |

Ad Hoc Scripts

What is Ad Hoc Script

ad hoc (ad-hoc) : (adj) arranged or happening when necessary and not planned in advance

ad hoc script : code written in a scripting language - e.g. Bash/Ruby/Python - to automate a task you were doing manually

Example: Deploy an EC2 Instance Using a Bash Script

In this example, you will automate all the manual steps, in example in chap 1 that deploy an app using AWS.

-

Migrate the user data

cd examples mkdir -p ch2/bashcp examples cp ch1/ec2-user-data-script/user-data.sh ch2/bash/ -

Write the Bash script to deploy an app using AWS

- Create security group

- Create rule for that security group

- Run the instance

- Get the public ip of the instance

- Print: instance id, security group id, public ip

# examples/ch2/bash/deploy-ec2-instance.sh # TODO

caution

Watch out for snakes: these are simplified examples for learning, not for production

Get your hands dirty: Ad hoc scripts

-

What happens if you run the Bash script a second time?

- Do you get a error?

- If so, why?

-

How would you have to tweak the script if you wanted to run multiple EC2 instances?

1.i. If the script is run the second time, there will be an error. 1.i. Because in a VPC - the default VPC in this case - the security group’s name need to be unique.

- To have multiple EC2, you can duplicate the whole script an change the name of the security-group.

warning

When you’re done experimenting with the script, you should manually un-deployed the EC2 instance by using the EC2 Console

How Ad Hoc Scripts Stack Up

| IaC category criteria | Ad Hoc script | Example |

|---|---|---|

| CRUD1 | - Only handle basically create<br> - Hard to have full CRUD | If you run the script a second time, the script will try to<br>- create a new security group<br>- without knowing that the security group is already exists. |

| Scale | Scale Hard, need to figure everything out yourself | - Keep track of everything<br> - Connect everything together <br> - Deployment strategies. |

| Idempotency2 & error handling | Most ad hoc scripts:<br> - are not idempotent <br> - don’t handle errors gracefully | - A script runs → Error → Partial state → Forget what it has done → Rerun the script → Another error. |

| Consistency | No consistency | You can:<br> - use any programming language you want<br> - write the code however you want. |

| Verbosity | Very verbose | You need to do everything yourself (CRUD, idempotency, error handling), which make the code very verbose. |

important

Key takeaway #2.1: Ad hoc scripts are

- great for small, one-off tasks,

- but not for managing all your infrastructure as code.

Configuration Management Tools

What is Configuration Management Tools

Configuration Management Tools : e.g. Chef, Puppet, Ansible : Appear before cloud computing → Designed with the assumption that: : - someone else had set up the hardware, e.g. Ops team racked the servers in data center. : - primary purpose is to handle the software - configure the servers: OS, dependencies, your app (deploy, update).

note

The configuration management tools can also deploy & manage servers or other infrastructure.

How Configuration Management Tools work

-

Most configuration tools makes changes directly on a set of server you specify, which is called mutable infrastructure paradigm:

- The same long-running servers will be mutate over & over again, over many years.

-

To be able to make changes on these servers, you need 2 things: something to drive the changes & a way to connect to the server.

Chef, Puppet Ansible Something to drive the changes You run amaster server(s) You use an CLI ➕ Can have areconciliation loop: check & match the desired configuration ➕ Can run anywhere (dev PC, build server…) A way to connect to the server Viaagent software that installed on each server Via SSH ➖ Need to install the agent ➖ Need to open extra port [!WARNING] Chicken-and-egg 🐥🥚 problem You have a tool that configure your servers:

- before you can use that tool

- you need to configure your servers.

- before you can use that tool

Example: Deploy an EC2 Instance Using Ansible

note

This example use Ansible to deploy an EC2 instance so you can have a server to use the configuration management tool - Ansible.

warning

Although configuration tools can also deploy & manage servers:

- they’re not originally designed to that.

For this example, spinning up a single server for learning & testing, Ansible is good enough.

tip

Before start this example, you can read the docs about the basic concepts in Ansible.

See:

- https://docs.ansible.com/ansible/latest/getting_started/index.html

- https://docs.ansible.com/ansible/latest/getting_started/basic_concepts.html

To deploy an EC2 instance using Ansible, you need to:

-

Define an Ansible playbook3

- in Ansible’s domain specific language (DSL), which is based on YAML.

- to tell Ansible to do what you want:

- create a security group

- create an EC2 key-pair (& save it)

- create the EC2 instance (& tag it)

# examples/ch2/ansible/create_ec2_instance_playbook.yml # TODO

Example: Configure a Server Using Ansible

- To let Ansible know which servers it needs configure, you provide an inventory4 that:

-

Specify a list of static IP addresses of the servers (in group).

e.g.

webservers: # A group of servers named webservers hosts: 10.16.10.1: dbservers: # A group of servers named dbservers hosts: 10.16.20.1: 10.16.20.2:- Now, you can use Ansible playbook to target the servers in those 2 groups:

webservers,dbservers

- Now, you can use Ansible playbook to target the servers in those 2 groups:

-

Use an inventory plugin to dynamically discover your servers with IP addresses that change frequently.

-

e.g.

-

Use the aws_ec2 inventory plugin to discovered EC2 instance on AWS

# examples/ch2/ansible/inventory.aws_ec2.yml plugin: amazon.aws.aws_ec2 regions: - us-east-2 keyed_groups: - key: tags.Ansible # 1️⃣ leading_separator: "" # 2️⃣ -

1️⃣: Ansible will create groups bases on the value of the tag

Ansible -

2️⃣: By default, Ansible adds a leading underscore to the group names. This disables it so the group name matches the tag value.

-

-

For each group (of servers) in the inventory, you can specify group variables5 to configure how to connect to the servers in that group.

# examples/ch2/ansible/group_vars/ch2_instances.yml ansible_user: ec2-user # The user Ansible ‘logs in’ as. ansible_ssh_private_key_file: ansible-ch2.key ansible_host_key_checking: false # Turn off host key checking so Ansible don't prompt you

-

- To let Ansible know what to do (with the servers), you provides a playbook (that specifies the roles6 of these server).

-

The playbook

# examples/ch2/ansible/configure_sample_app_playbook.yml - name: Configure the EC2 instance to run a sample app hosts: ch2_instances # Target the servers in group ch2_instances - the one created in previous example, grouped by the inventory plugin gather_facts: true become: true roles: - sample-app # Configure the server using an Ansible role called sample-app -

The role:

-

Tasks

# ch2/ansible/roles/sample-app/tasks/main.yml - name: Add Node packages to yum shell: curl -fsSL https://rpm.nodesource.com/setup_21.x | bash - # 1️⃣ - name: Install Node.js yum: name: nodejs # 2️⃣ - name: Copy sample app copy: # 3️⃣ src: app.js # Relative path to the role's files directory dest: app.js # Relative path on the server - name: Start sample app shell: nohup node app.js &- 1️⃣: Use the

shellmodule to installyum - 2️⃣: Use the

yummodule to installnodejs - 3️⃣: Use the

copymodule to copyapp.jsto the server.

- 1️⃣: Use the

-

Files

Copy

app.jsfrom chapter 1 toexamples/roles/sample-app/files/app.js

-

- The final structure of the example

.

├── configure_sample_app_playbook.yml

├── group_vars

│ └── ch2_instances.yml

├── inventory.aws_ec2.yml

└── roles

└── sample-app

├── files

│ └── app.js

└── tasks

└── main.yml

- Run the playbook

tip

Don’t forget to authenticate to AWS on the command line.

ansible-playbook -v -i inventory.aws_ec2.yml configure_sample_app_playbook.yml

Output

PLAY RECAP

xxx.us-east-2.compute.amazonaws.com : ok=5 changed=4 failed=0

Get your hands dirty with Ansible

- What happens if you run the Ansible playbook a second time? How does this compare to running the Bash script a second time?

- How would you have to tweak the playbook if you wanted to run multiple EC2 instances?

- Figure out how to use the SSH key created by Ansible (

ansible.key) to manually SSH to your EC2 instance and make changes locally.

warning

When you’re done experimenting with Ansible, you should manually un-deployed the EC2 instance by using the EC2 Console

How Configuration Management Tools Stack Up

| Aspect | Configuration Management Tools | Explain, examples |

|---|---|---|

| CRUD | Most supports 3/4 CRUD operation: | |

| - Create | ✅ | |

| - Read | 😢 Hit or miss, e.g. For Ansible, you need to manually give each resource a unique name or tag | |

| - Update | 😢 Hit or miss | |

| - (Don’t support delete) | ❌ | |

| Scale | - Designed for managing multiple servers. | Increase the number of instances, and Ansible will configure all of them. |

| - Some has builtin support forrolling deployments | If you have 20 servers → update Ansible role → re-run Ansible → Ansible rolls out the change in batch, and ensure no downtime. | |

| Idempotency & error handling | Some tasks are idempotent | yum |

| Some task are not idempotent | Some task using shell module | |

| Consistency | Consistent, predictable structure code with conventions. | Docs, file layout, named parameters, secret managements… |

| Verbosity | Concise ← DSL | The Ansible code may have the same length with Bash, but handles a lot of things: CRU, scaling… |

Drawbacks of configuration management tools

- Setup cost

- Configuration drift due to mutable infrastructure paradigm: each long-running server can be a little different from the others.

Immutable infrastructure paradigm

With immutable infrastructure paradigm:

- Instead of long-running physical servers,

- you use short-lived virtual servers (that will be replaced every time you do an update).

- Once you’ve deployed a server, you’ve never make changes to it again.

- If you need to update something, even it’s just a new version of your application

- you deploy a new server.

- If you need to update something, even it’s just a new version of your application

tip

Cattle vs pets

| Cattle | Pet | |

|---|---|---|

| Examples | 🐄🐃 | 🐶🐱 |

| Paradigm | Immutable infrastructure | Mutable infrastructure |

| What it mean? | Treat a server like a cattle: - each one is indistinguishable to others, with random, sequential IDs - kill them off & replace them regularly | Treat a server like a pet: - give it unique name - (take care of it) & keeps it alive as long as possible |

note

Immutable infrastructure paradigm is inspired by:

- Function programming:

- Variables are immutable

- After you set a variable to a value, you can’t change that variable again.

- If you need to update something, you create a new variable.

- It’s a lot easier to reason about your code.

- Variables are immutable

important

Key takeaway #2.2

Configuration management tools are

- great for managing the configuration of servers,

- but not for deploying the servers themselves, or other infrastructure.

Server Templating Tools

What is Server Templating Tools

Server Templating Tools : e.g. Docker, Packer, Vagrant : instead of: : 1. launching servers : 2. configure them (by running the same code on each) : you: : 1. create an image of a server that captures a fully self-contained “snapshot” of the operating system (OS), the software, the files, and all other relevant details. : 2. use some other IaC to install that image on all of your servers.

Two types of image tools - Virtual machine and container

Virtual machine

virtual machine (VM) : a VM emulates an entire computer system, including the hardware (and of course the software)

VM image : the blueprint for a VM : defined with tools: Packer, Vagrant

hypervisor : aka virtualizer : a type of computer software/firmware/hardware that creates & runs virtual machines.

-

You run a hypervisor7 with the VM image to create a VM that virtualize/emulate

- the underlying hardware: CPU, memory, hard driver, networking…

- the software: OS, dependencies, apps…

-

Pros and cons of VM:

VM Pros - Each VM is fully isolated from the host machine & other VM. <- Can run any 3rd-party code without worry of malicious actions - All VMs from the same VM image will run exactly the same way in all environments. e.g. Your PC, a QA server, a production server. Cons - Overhead of CPU/memory usage. <- For each VM, the hypervisor needs to virtual all hardware & running a guest OS … - Overhead of startup time. <- … that whole OS needs to start.

Container

container : a container emulates the user space8 of an OS

container image : the blueprint for a container

container engine : a Container Engine takes a Container Image : - (simulates an user space with memory, mount points & networking) : - turns it into a Container (aka running processes) : e.g. Docker, cri-o, Podman

| VM | ||

|---|---|---|

| Pros | - Each container is partial isolated from the host machine & other containers. | <- ☑️ Good enough to run your application code. |

| - All containers from the same container image will run exactly the same way in all environments. | e.g. Your PC, a QA server, a production server. | |

| - No overhead of CPU/memory usage & startup time. | <- For all containers, the container engine only needs to virtual a user space (instead of all hardware & a guest OS) | |

| Cons | - Each container is only partial isolated from the host machine & other containers. | <- ❌ Not good enough to run any 3rd-party code without worry about malicious actions. |

Example: Create a VM Image Using Packer

In this example, you will use Packer to create a VM image for AWS (called an Amazon Machine Image - AMI)

-

Create a Packer template

# examples/ch2/packer/sample-app.pkr.hcl packer { required_plugins { # 0️⃣ amazon = { version = ">= 1.3.1" source = "github.com/hashicorp/amazon" } } } source "amazon-ebs" "amazon_linux" { # 1️⃣ ami_name = "sample-app-packer-${uuidv4()}" ami_description = "Amazon Linux 2023 AMI with a Node.js sample app." instance_type = "t2.micro" region = "us-east-2" source_ami = "ami-0900fe555666598a2" ssh_username = "ec2-user" } build { # 2️⃣ sources = ["source.amazon-ebs.amazon_linux"] provisioner "file" { # 3️⃣ source = "app.js" destination = "/home/ec2-user/app.js" } provisioner "shell" { # 4️⃣ inline = [ "curl -fsSL https://rpm.nodesource.com/setup_21.x | sudo bash -", "sudo yum install -y nodejs" ] pause_before = "30s" } }- 0️⃣ - Plugin: Use the

Amazonplugin9 to build Amazon Machine Image (AMI) - 1️⃣ - Builder: Use the

amazon-ebsbuilder to create EBS-backed AMIs by- (launching a source AMI)

- (re-packaging it into a new AMI after provisioning10)

- 2️⃣ - Build steps:

- After provision the EC2 instance, Packer connects to the server and runs the build steps in the order specified in the Packer template.

- (When all the builds steps have finished, Packer will take a snapshot of the servers and use it to create an AMI)

- 3️⃣ - File provisioner: Copy the files to the server.

- 4️⃣ - Shell provisioner: Execute shell commands on the server.

[!NOTE] The Packer template is nearly identical to the Bash script & Ansible playbook,

- except it doesn’t actually run the app.

- 0️⃣ - Plugin: Use the

-

Install Packer

-

Install Packer plugins (used in the Packer template)

packer init sample-app.pkr.hcl[!NOTE] Packer can create images for many cloud providers, e.g. AWS, Azure, GCP. The code for each providers is

- not in the Packer binary itself

- but in a separate plugin (that the

packer initcommand can install)

-

Build image from Packer template

packer build sample-app.pkr.hclOutput

==> Builds finished. The artifacts of successful builds are: --> amazon-ebs.amazon_linux: AMIs were created: us-east-2: ami-XXXXXXXXXXXXXXXXX- The

ami-XXXvalue is the ID of the AMI that was created from the Packer template.

[!NOTE] The result of running Packer is not a server running your app, but the image of the server.

- This image will be used by another IaC tolls to launch one or more servers (running the image)

- The app will be run when the image is deployed (or the server is launched).

- The

Get your hands dirty with Packer

-

What happens if you run packer build on this template a second time? Why?

-

Figure out how to update the Packer template so it builds images that

- not only can run on AWS,

- but also can run on other clouds (e.g., Azure or GCP)

- or on your own computer (e.g., VirtualBox or Docker).

How Server Templating Tools Stack Up

| Aspect | Server Templating Tools | |

|---|---|---|

| CRUD | Only supports Create | → Create’s all a server templating tool needs11 |

| Scale | Scale very well | e.g. The same image can be used to launch 1 or 1000 servers. |

| Idempotency & error handling | Idempotent by design | → If there is an error, just rerun & try again. |

| Consistency | Consistent, predictable structure code with conventions. | e.g. Docs, file layout, named parameters, secret managements… |

| Verbosity | Very concise | ← Use an DSL; don’t have to deal with all CRUD operations; idempotent “for free” |

warning

Server templating tools cannot be used in isolated (because it only supports create).

- If you use a server templating tool, you need another tool to support all CRUD operations, e.g. a provisioning tool

note

All server templating tools will create images but for slightly different purposes:

- Packer: create VM images run on production servers, e.g. AMI

- Vagrant: create VM images run on development computers, e.g. VirtualBox image

- Docker: create container images of individual applications, which can be run any where as long as that computer has installed an container engine.

important

Key takeaway #2.3 Server templating tools are

- great for managing the configuration of servers with immutable infrastructure practices.

- (but needs to be used with another provisioning tools)

Provisioning Tools

What is Provisioning Tools

provisioning tool : e.g. OpenTofu/Terraform, CloudFormation, OpenStack Heat, Pulumi… : a provisioning tool is responsible for : - deploying : - managing (all CRUD operations) : the servers & other infrastructure in the clouds: : - (servers), databases, caches, load balances, queues, monitoring : - subnet configurations, firewall settings, routing rules, TLS certificates : - …

note

What are the different between ad-hoc script, configuration management tools, server templating tools & provisioning tools?

- Configuration management tools: manage configurations of servers

- Server templating tools: manage configurations of servers with immutable infrastructure practices

- Provisioning tools: deploy & manage the servers (& other infrastructure)

How Provisioning Tools work

Under the hood, provisioning tools work by

- translating the code you write

- into API calls to the cloud providers you’re using

e.g. If you write OpenTofu/Terraform code to create a server in AWS, when you run OpenTofu, it will:

- Parse your code

- (Based on the the configuration you specified,) make a number of APIs calls to AWS

- to create an EC2 instance

note

By making APIs to cloud providers, provisioning tools bring in many advantages:

- You don’t need to setup master servers.

- You don’t need to setup connection to the servers ← Take advantages of the authentication mechanism of cloud providers.

Example: Deploy an EC2 Instance Using OpenTofu

tip

Terraform vs OpenTofu

Terraformis a popular provisioning tool that HashiCorp open sourced in 2014 under Mozilla Public Licenses (MPL) 2.0.- In 2024, HashiCorp switched

Terraformto non-open source Business Source License (BSL).

- In 2024, HashiCorp switched

- As a result, the community fork

Terraformunder the namedOpenTofu, which remains open source under the MPL 2.0 license.

To deploy an EC2 Instance using OpenTofu, you

-

write an OpenTofu module

- in HCL12,

- in configuration files with a

.tfextension (instead of.pkr.hclfor Packer template)

[!NOTE] An OpenTofu module is a folder with all

.tffiles in that folder:- No matter are the name of these

.tffiles. - But there are some conventions, e.g.

main.tf: Main resourcesvariables.tf: Input variablesoutputs.tf: Output variables

-

use that OpenTofu module (run OpenTofu code) to deploy the EC2 instance.

For this example, the OpenTofu module for an EC2 instance looks like this:

-

main.tf: Main resources# examples/ch2/tofu/ec2-instance/main.tf provider "aws" { # 1️⃣ region = "us-east-2" } resource "aws_security_group" "sample_app" { # 2️⃣ name = "sample-app-tofu" description = "Allow HTTP traffic into the sample app" } resource "aws_security_group_rule" "allow_http_inbound" { # 3️⃣ type = "ingress" protocol = "tcp" from_port = 8080 to_port = 8080 security_group_id = aws_security_group.sample_app.id cidr_blocks = ["0.0.0.0/0"] } resource "aws_instance" "sample_app" { # 4️⃣ ami = var.ami_id # 4️⃣1️⃣ instance_type = "t2.micro" vpc_security_group_ids = [aws_security_group.sample_app.id] user_data = file("${path.module}/user-data.sh") # 4️⃣2️⃣ tags = { Name = "sample-app-tofu" } }What the OpenTofu code do?

-

1️⃣ - Use AWS provider: to work with AWS cloud provider.

[!NOTE] OpenTofu can works with many providers, e.g. AWS, Azure, GCP…

- An OpenTofu provider is like a Packer plugin.

[!TIP] AWS has data centers all over the world, grouped into regions.

- An AWS

regionis a separate geographic area, e.g.us-east-1(Virginia),us-east-2(Ohio),eu-west-1(Ireland),ap-southeast-1(Singapore)- Within each region, there are multiple isolated data centers, called

Availability Zones(AZs)

- Within each region, there are multiple isolated data centers, called

-

2️⃣ - Create a security group: to control the network traffic go in & out the EC2 instance

[!NOTE] For each type of provider, there are

- several kinds of resources that you can create

- e.g. servers, databases, load balancers, firewall settings…

The syntax for creating a resource (of a provider) in OpenTofu is as follows:

-

resource "<PROVIDER>_<TYPE>" "<NAME>" { [CONFIG ...] }with:

PROVIDER: name of the provider, e.g.awsTYPE: type of the resource (of that provider) to create, e.g.instance(an AWS EC2 instance)NAME: an identifier you can use in OpenTofu code to refer to this resource, e.g.my_instanceCONFIG: one or moreargumentsthat specific to that resource.

- several kinds of resources that you can create

-

3️⃣ - Create a rule for the security group: to allow inbound HTTP request on port 8080.

-

4️⃣ - Create an EC2 instance: that uses the previous security group, and have a

Nametag ofsample-app-tofu.- 4️⃣1️⃣ - **Set the AMI**: to `var.ami_id`, which is a reference to an `input variable` named `ami_id` in `variables.tf`. - 4️⃣2️⃣ - **Set the user data**: to a file named `user-data.sh`, which is in the OpenTofu module's directory, next to other `.tf` files.

-

-

variables.tf: Input variables# examples/ch2/tofu/ec2-instance/variables.tf variable "ami_id" { description = "The ID of the AMI to run." type = string }[!NOTE] The input variables allow an OpenTofu module

- to be customized when that module is used to provision resources.

Example explain

- The input variable

ami_idallow you to pass in the ID of an AMI that will be used to run the EC2 instance.- You will pass in

IDof the AMI you build Packer template in previous section.

- You will pass in

-

outputs.tf: Output variables# examples/ch2/tofu/ec2-instance/outputs.tf output "instance_id" { description = "The ID of the EC2 instance" value = aws_instance.sample_app.id } output "security_group_id" { description = "The ID of the security group" value = aws_security_group.sample_app.id } output "public_ip" { description = "The public IP of the EC2 instance" value = aws_instance.sample_app.public_ip }[!NOTE] The output variables can be used to log & share values betweens OpenTofu modules.

-

(Not about OpenTofu) The application & the user data

-

The application: is already included in the AMI (built from the Packer template in previous section).

-

The EC2 instance user data (to start the app)

# examples/ch2/tofu/ec2-instance/user-data.sh #!/usr/bin/env bash nohup node /home/ec2-user/app.js &

-

After writing the OpenTofu module code, you need to run that module code to deploy the EC2 instance:

-

Install OpenTofu

-

Install any providers used in OpenTofu code

tofu init -

Apply the OpenTofu code to deploy the EC2 instance

-

Run the

applycommandtofu apply -

The

tofu applycommand will prompt you for theami_idvalue and you paste in the value via the CLIvar.ami_id The ID of the AMI to run. Enter a value:Alternative to provide the values via the CLI prompt, you can do it via

-varflag, environment variables, or variable definitions file.-

-varflag:tofu apply -var ami_id=<YOUR_AMI_ID> -

Environment variable

TF_VAR_<var_name>export TF_VAR_ami_id=<YOUR_AMI_ID> tofu apply -

Variable definition file (a file named

terraform.tfvars)-

Define

terraform.tfvars# ch2/tofu/ec2-instance/terraform.tfvars ami_id = "<YOUR_AMI_ID>" -

Run

tofu applyand OpenTofu will automatically find theami_idvalue.

-

-

-

The

tofu applycommand will then-

show you the

execution plan(planfor short)…OpenTofu will perform the following actions:…Details of the actions…

# aws_instance.sample_app will be created + resource "aws_instance" "sample_app" { + ami = "ami-0ee5157dd67ca79fc" + instance_type = "t2.micro" ... (truncated) ... } # aws_security_group.sample_app will be created + resource "aws_security_group" "sample_app" { + description = "Allow HTTP traffic into the sample app" + name = "sample-app-tofu" ... (truncated) ... } # aws_security_group_rule.allow_http_inbound will be created + resource "aws_security_group_rule" "allow_http_inbound" { + from_port = 8080 + protocol = "tcp" + to_port = 8080 + type = "ingress" ... (truncated) ... }Plan: 3 to add, 0 to change, 0 to destroy. Changes to Outputs: + instance_id = (known after apply) + public_ip = (known after apply) + security_group_id = (known after apply)[!NOTE] The plan output is similar to the output of the

diffcommand of Linux andgit diff:Anything with:

- a plus sign (

+) will be created - a minus sign (

–) will be deleted - a tilde sign (

~) will be modified in place

[!TIP] The plan output can also be generated by running

tofu plan. - a plus sign (

-

…prompt you for confirmation

Do you want to perform these actions? OpenTofu will perform the actions described above. Only 'yes' will be accepted to approve. Enter a value: -

If you type

yesand hitEnter, OpenTofu will proceed:Enter a value: yesOutput