Chapter 9: How to Store Data: SQL, NoSQL, Queues, Warehouses, and File Stores

-

Almost all software needs to store data.

-

For most companies, data is one of the most valuable, longest-lived assets.

-

There are many types of data and many different ways to store them:

Type of data / data store How to store? Local storage If your application needs to store data locally, you write it to a hard drive. Primary data store The general-purpose workhorse and the source of truth for most companies is the relational database. Caching If you need to speed up data retrieval, you can cache responses in key-value stores and content distribution networks (CDNs). File storage To store and serve files, you turn to file servers & object stores. Semi-structured data and search If you need to store non-uniform data or to search and filter that data, you turn to a document store. Analytics To extract insights from your data, you turn to columnar databases. Asynchronous processing To process data and events in the background, and to decouple your systems, you turn to queues and streams. -

To meet scalability & availability requirements, you use partitioning & replication.

-

To ensure your data isn’t lost in a disaster scenario, you use snapshots, continuous backups, and replication.

This chapter will walk you through various hands-on examples:

- deploying a PostgreSQL database, automating schema migrations

- serving files from S3, and using CloudFront as a CDN

- configuring backups and replication

Local Storage: Hard Drives

The challenges of storing data using custom file format

-

Querying the data

It’s hard to extract insights from data.

-

Evolving the data format

It’s hard to evolve the data format without incompatible issues with older files.

-

Handling concurrent access to the data

It’s impossible to reading/writing the data from different computers.

warning

Storing data using custom file format directly on local storages is usually a bad idea if the software requirements need to be changed.

Stateful and stateless software

stateful software : Software that reads & writes persistent data to the local hard drive. : - Use custom format for data, stored them as files in local hard drive.

stateless software : Software that does not rely on persistent data on the local hard drive. : Persistent data is stored in a dedicated data store. : - The only stateful system in your software architecture. : Easier to deploy, update, scale, and maintain.

note

Both type of software can still write ephemeral data1 - e.g. log files - to local hard drives.

[!IMPORTANT] Key takeaway #1 Keep your applications stateless. Store all your data in dedicated data stores.

Types of hard drives

| Storage Type | Where? | Description | Examples | Protocols / Technologies | Pros | Cons |

|---|---|---|---|---|---|---|

| Physical Hard Drives | On-premises | Hard drives physically attached to on-prem servers. | Magnetic, SSD.. | - SATA, NVMe… - RAID | Direct control, fast access | Requires on-prem infrastructure |

| Network-attached Hard Drives | Cloud | Hard drives attached to cloud VMs over the network. | Amazon EBS 2 Google Persistent Disk Azure Disk Storage | Detachable, re-attachable3 for VMs (for stateful apps) | Higher latency compared to local drives | |

| Shared Hard Drives | Cloud, on-premises | Single drive shared among multiple servers for shared access. | Amazon EFS 4 Google Cloud Filestore Azure Files | Network File System (NFS) Common Internet File System (CIFS) Server Message Block (SMB) | Shared access5 for multiple servers | Higher latency compared to local drives |

| Volumes in Container Orchestration6 | Cloud, on-premises | Persistent volumes7 for data storage in container environments. | Amazon EBS (AWS EKS) Google Persistent Disk (GKE) Azure Disk Storage (AKS)Local disk, e.g. Docker Desktop | Data persists7 even when containers are redeployed | ||

| ______________________________ | ________________________ | _____________________________________________ | __________________________________ | __________________________________________ | __________________________________________________ | _____________________________________________ |

tip

Whether you’re using a physical hard drives, or any other types of storages, all look and behave exactly like a local, physically-attached hard drive:

- To your software, it looks like any local file system that you can read from and write to.

[!WARNING] Don’t run data stores in containers

You’re one config mistake from losing your company’s data - the most valuable asset.

Containers are designed to be easy to distribute, scale, and throw away (hence the default of ephemeral disks), which

- is great fit for stateless apps and local development

- but is not a good fit for data stores in production

Using persistent volume for data store is not easy:

- Persistent volume

- needs to support varies widely amongst orchestration tools

- is generally less mature than other orchestration features

- Integration with tooling can be trickier (if that tooling wasn’t designed for containers)

- Support from database vendors may be trickier (not all of them support containerized deployments).

Primary Data Store: Relational Databases

relational database : The most dominant data storage solution for decades: : Flexible : - Handle a remarkably wide range of use cases8 : - Remarkable scalability & availability : Reliable : - Ensure data integrity & consistency : - Store data efficiently (temporally & spatially) : - Strong security model : The most mature9 data storage technology available : - Massive ecosystem of tools, vendors, expert developers

note

Most companies use relational databases as their primary data stores — the source of truth for their data.

Just as cryptography:

-

always use mature, battle-tested, proven off-the-shelf solutions.

-

Don’t roll out your own data store, except if you have:

- use cases that all existing data stores can’t handle, which only happens at massive scale, e.g. Google, Facebook, Twitter

- at least a decade10 to spare

[!IMPORTANT] Key takeaway #2 Don’t roll your own data stores: always use mature, battle-tested, proven off-the-shelf solutions.

Writing & Reading Data

A relational database

- stores data in tables, where

- each item is stored in a row,

table : represents a collection of related items

row : represents an item

note

Each row in a table has the same columns

e.g. A website for a bank store data about the customers

-

in a

customerstable, where -

each row represents one customer as a tuple of

id,name,date_of_birth, andbalanceid name date_of_birth balance 1 Brian Kim 1948-09-23 1500 2 Karen Johnson 1989-11-18 4853 3 Wade Feinstein 1965-02-29 2150

To interact with a relational database, you use a language called Structured Query Language (SQL).

-

To write data in to a table, you use the

INSERT INTOstatementINSERT INTO <table> ( <columns> ) VALUES ( <values> );e.g.

-

INSERT INTO customers (name, date_of_birth, balance) VALUES ('Brian Kim', '1948-09-23', 1500); INSERT INTO customers (name, date_of_birth, balance) VALUES ('Karen Johnson', '1989-11-18', 4853); INSERT INTO customers (name, date_of_birth, balance) VALUES ('Wade Feinstein', '1965-02-25', 2150); -

(This example assume the schema is already exists)

[!NOTE] Relational databases require you to define a schema to describe the structure of each table before you can write any data to that table (as in Schemas and Constraints).

-

-

To read all data from a table, you use

SELECTstatement to form an SQL query.SELECT <columns> FROM <table>;[!NOTE] Use the wildcard

*for all columnse.g.

-

SELECT * FROM customers;id | name | date_of_birth | balance ----+----------------+---------------+--------- 1 | Brian Kim | 1948-09-23 | 1500 2 | Karen Johnson | 1989-11-18 | 4853 3 | Wade Feinstein | 1965-02-25 | 2150

-

-

To read and keep only some of the data (aka filtering query), you use

SELECTstatement with aWHEREclause:SELECT <columns> FROM <table> WHERE <conditions>;e.g.

-

SELECT * FROM customers WHERE date_of_birth > '1950-12-31';id | name | date_of_birth | balance ----+----------------+---------------+--------- 2 | Karen Johnson | 1989-11-18 | 4853 3 | Wade Feinstein | 1965-02-25 | 2150

-

tip

Relational databases allow query data in countless ways:

WHEREto filter dataORDER BYto sort dataGROUP BYto group dataJOINto query data from multiple tablesCOUNT,SUM,AVG, and a variety of other aggregate functions to perform calculations on your data,- indices to make queries faster,

- and much more.

[!WARNING] Watch out for snakes: SQL has many dialects SQL:

- In theory, is a language standardized by ANSI and ISO that is the same across all relational databases.

- In practice, is a slightly different dialect of SQL for each every relational database .

note

This books focuses on SQL concepts that apply to all relational databases, but technically, the examples use the PostgreSQL dialect.

ACID Transactions

transaction : a set of coherent operations that should be performed as a unit

In relational databases, transactions must meet the following four properties:

Property Description Note Atomicity Either all the operations in the transaction happen, or none of them do. Partial successes or partial failures are not allowed. Consistency The operations always leave the data in a state that is valid Valid state is a state that according to all the rules and constraints you’ve defined in the database. Isolation Even though many transactions may be happening concurrently, the database should end up in the exact same state As if the transactions had happened sequentially (in any orders). Durability Once a transaction has completed, it is recorded to persistent storage (typically, to a hard drive) It isn’t lost, even in the case of a system failure. -

These 4 properties form the acronym ACID, which is one of the defining property of a relational database.

e.g.

-

Deduct $100 from every customer (transaction across single statement)

UPDATE customers SET balance = balance - 100;For a relational database, this statement will be execute to all customers in a single ACID transaction:

- either the transaction will complete successfully, and all customers will end up with $100 less,

- or no customers will be affected at all.

[!TIP] For a data store that doesn’t support ACID transactions:

- It would be possible for those data stores to crash part way through this transaction

- The data end up with some customers with $100 less and some unaffected (No atomicity)

-

Transfer $100 from customer 1 to customer 2 (transaction across multiple statements)

START TRANSACTION; UPDATE customers SET balance = balance - 100 WHERE id = 1; UPDATE customers SET balance = balance + 100 WHERE id = 2; COMMIT;For a relational database, all the statements between

START TRANSACTIONandCOMMITwill execute as a single ACID transaction, ensuring that:- one account has the balance decreased by $100, and the other increased by $100

- or neither account will be affected at all.

[!TIP] For a data store that doesn’t support ACID transactions, the data could end up in an in-between state that is inconsistent:

e.g.

- The first statement completes, subtracting $100.

- Then the data store crashes before the second statement runs, and as a result, the $100 simply vanishes into thin air (No atomicity)

Schemas and Constraints

note

Relational databases require you to define a schema for each table before you can read and write data to that table.

Defining a schema

To define a schema, you use CREATE TABLE statement

CREATE TABLE <table> (

<column_name> <column_type>,

<...>

);

e.g.

-

Create a table called

customerswith columns calledid,name,date_of_birth, andbalanceCREATE TABLE customers ( id SERIAL PRIMARY KEY, name VARCHAR(128), date_of_birth DATE, balance INT );

Schema’s integrity constraints

The schema includes a number of integrity constraints to enforce business rules:

-

Domain constraints:

Domain constraints limit what kind of data you can store in the table.

e.g.

-

Each column has a type, such as

INT,VARCHAR, andDATE, so the database will prevent you from inserting data of the wrong type -

The

idcolumn specifiesSERIAL, which is a pseudo type (an alias) that gives you a convenient way to capture three domain constraints:

-

-

Key constraints

A primary key is a column or set of columns that can be used to uniquely identify each row in a table

e.g.

- The

idcolumn specifiesPRIMARY KEY, which means this column is the primary key for the table, so the database will ensure that every row has a different value for this column.

- The

-

Foreign key constraints

A foreign key constraint is where a column in one table can contain values that are references to a column in another table.

e.g. Bank customers could have more than one account, each with their own balance,

-

Instead of having a single

balancecolumn in thecustomerstable -

You could create a second table called

accounts, where each row represents one accountCREATE TABLE accounts ( account_id SERIAL PRIMARY KEY, (1) account_type VARCHAR(20), (2) balance INT, (3) customer_id INT REFERENCES customers(id) (4) );The

accountstable has 4 columns:-

1: A unique ID for each account (the primary key).

-

2: The

account_type: e.g., checking or savings. -

3: The

balancefor the account. -

4: The ID of the customer that owns this account.

[!NOTE] The

REFERENCESkeyword labels this column as a foreign key into theidcolumn of thecustomerstable.- This will prevent you from accidentally inserting a row into the

accountstable that has an invalid customer ID (i.e., one that isn’t in thecustomerstable).

- This will prevent you from accidentally inserting a row into the

-

[!TIP] Foreign key constraint

-

is one of the defining characteristics of relational databases, as they

- allow you to define and enforce relationships between tables.

👉 This is what the “relational” in “relational database” refers to.

-

is critical in maintaining the referential integrity of your data

👉 another major reason to use a relational database as your primary source of truth.

-

[!IMPORTANT] Key takeaway #3 Use relational databases as your primary data store (the source of truth), as

they are

- reliable

- secure

- mature

they support

- schemas

- integrity constraints

- foreign key relationships

- joins

- ACID transactions

- and a flexible query language (SQL).

Schema modifications and migrations

To modify the schema for existing tables, you can use ALTER TABLE

warning

You should be careful when modifying a schema, or you will run into backward compatibility issues.

When use have a lot of modification to the schema, you can:

-

Manage the schemas manually

- Connecting directly to the database

- Executing

CREATE TABLE,ALTER TABLEby hand

-

Manage the schemas as code using a schema migration tool, such as Flyway, Liquibase, Atlas, Bytebase, Alembic, migrate, Squitch, ActiveRecord, Sequel, Knex.js, GORM.

When using a schema migration tool:

-

You define

-

your initial schemas

-

all the modifications as code, in an ordered series of migration files that you check into version control.

e.g.

-

Flyway uses standard SQL in

.sqlfilesv1_create_customers.sql v2_create_accounts.sql v3_update_customers.sql -

Knex.js uses a JavaScript DSL in

.jsfiles20240825_create_customers.js 20240827_create_accounts.js 20240905_update_customers.js

-

-

-

You apply these migration files using the schema migration tool, which keeps track of

- which of your migration files have already been applied, and

- which haven’t

so no matter

- what state your database is in, or

- how many times you run the migration tool,

you can be confident your database will end up with the desired schema.

As you make changes to your app, new versions of the app code will rely on new versions of your database schema.

To ensure these versions are automatically deployed to each environment, you will need to integrate the schema migration tool into your CI/CD pipeline

The schema migration tools can be run:

-

As part of app’s boot code

Advantages:

-

This will works in any environments:

- shared environments, e.g.

dev,stage,prod - or any developer’s local environment

- shared environments, e.g.

-

The migration are constantly being tested.

Disadvantages:

-

The migrations sometimes take a long time, which cause the app boot slowly, which might be a big problem:

-

some orchestration tools may redeploy the app before the migration can finish.

-

for serverless apps because of the cold starts.

-

-

-

As a separate strep in deployment pipeline, just before you deploy the app

Example: PostgreSQL, Lambda, and Schema Migrations

In this example, you’ll

- Deploy PostgreSQL in AWS using RDS13.

- Define the schema for this database as code using Knex.js

- Deploy a Lambda function and API Gateway to run a Node.js serverless web app that

- uses Knex.js to connect to the PostgreSQL database over TLS

- run queries

- return the results as JSON

Create an OpenTofu root module for PostgreSQL, Lambda, API Gateway

Use the rds-postgres OpenTofu module to deploy PostgreSQL on RDS:

-

Create the folder

cd examples mkdir -p ch9/tofu/live/lambda-rds cd ch9/tofu/live/lambda-rds -

The root module

main.tffor deploying Postgres on RDS# examples/ch9/tofu/live/lambda-rds/main.tf provider "aws" { region = "us-east-2" } module "rds_postgres" { source = "github.com/brikis98/devops-book//ch9/tofu/modules/rds-postgres" name = "bank" # (1) instance_class = "db.t4g.micro" # (2) allocated_storage = 20 # (3) username = var.username # (4) password = var.password # (5) }- 1: Set the name of the RDS instance, and the logical database within it, to

bank - 2: Use a

db.t4g.microRDS instance (2 CPUs and 1GB of memory, is part of the AWS free tier) - 3: Allocate 20 GB of disk space for the DB instance.

- 4: Set the username for the master database user to

var.username(an input variable). - 5: Set the password for the master database user to

var.password(an input variable).

- 1: Set the name of the RDS instance, and the logical database within it, to

-

Add input variables for the username/password of the database

# examples/ch9/tofu/live/lambda-rds/variables.tf variable "username" { description = "Username for master DB user." type = string } variable "password" { description = "Password for master DB user." type = string sensitive = true }

Use lambda and api-gateway modules to deploy a Lambda function and an API Gateway

-

The

main.tffor deploying a Lambda Function and API Gateway:# examples/ch9/tofu/live/lambda-rds/main.tf module "app" { source = "github.com/brikis98/devops-book//ch3/tofu/modules/lambda" name = "lambda-rds-app" src_dir = "${path.module}/src" # (1) handler = "app.handler" runtime = "nodejs20.x" memory_size = 128 timeout = 5 environment_variables = { # (2) NODE_ENV = "production" DB_NAME = module.rds_postgres.db_name DB_HOST = module.rds_postgres.hostname DB_PORT = module.rds_postgres.port DB_USERNAME = var.username DB_PASSWORD = var.password } } module "app_gateway" { source = "github.com/brikis98/devops-book//ch3/tofu/modules/api-gateway" name = "lambda-rds-app" # (3) function_arn = module.app.function_arn api_gateway_routes = ["GET /"] }- 1: The source code for the function will be in the

srcfolder. You’ll see what this code looks like shortly. - 2: Use environment variables to pass the Lambda function all the details about the database, including the database name, hostname, port, username, and password.

- 3: Create an API Gateway so you can trigger the Lambda function using HTTP requests.

- 1: The source code for the function will be in the

-

Add output variables for API Gateway’s endpoint, and database’s name, host, port

output "app_endpoint" { description = "API Gateway endpoint for the app" value = module.app_gateway.api_endpoint } output "db_name" { description = "The name of the database" value = module.rds_postgres.db_name } output "db_host" { description = "The hostname of the database" value = module.rds_postgres.hostname } output "db_port" { description = "The port of the database" value = module.rds_postgres.port }

Create schema migrations with Knex.js

-

Create a folder for the schema migrations

mkdir -p src cd srcThe schema migrations is a Node package (Knex.js uses JavaScript).

-

Create a

package.json{ "name": "lambda-rds-example", "version": "0.0.1", "description": "Example app 'Fundamentals of DevOps and Software Delivery'", "author": "Yevgeniy Brikman", "license": "MIT" } -

Install dependencies

npm install knex --save # (1) npm install knex --global # (2) npm install pg --save # (3)- (1): Install Knex.js as a dependency, so it’s available to Lambda function.

- (2): Install Knex.js as a CLI tool.

- (3): Install

node-postgreslibrary that Knex.js use to talk to PostgreSQL.

-

When Knex.js apply schema migrations, it will connect to PostgreSQL over the network.

-

The connection to PostgreSQL database on RDS is encrypted using TLS.

- Because the PostgreSQL database is internal, AWS use its root CA certificate to sign the TLS certificate.

-

To validate the database’s TLS certificate, you need to:

-

Download the root CA certificate14 that is used to sign the database TLS certificate

curl https://truststore.pki.rds.amazonaws.com/us-east-1/us-east-1-bundle.pem -o src/rds-us-east-2-ca-cert.pem -

Configure your app to trust the root CA certificate

// examples/ch9/tofu/live/lambda-rds/src/knexfile.js const fs = require("fs").promises; module.exports = { // (1) client: "postgresql", connection: async () => { // (2) const rdsCaCert = await fs.readFile("rds-us-east-2-ca-cert.pem"); // (3) return { database: process.env.DB_NAME, host: process.env.DB_HOST, port: process.env.DB_PORT, user: process.env.DB_USERNAME || process.env.TF_VAR_username, password: process.env.DB_PASSWORD || process.env.TF_VAR_password, ssl: { rejectUnauthorized: true, ca: rdsCaCert.toString() }, }; }, };-

(1): Use the PostgreSQL library (

node-postgres) to talk to the database. -

(2): Read the root CA certificate from AWS.

-

(3): This JSON object configures the connection to

- use the database name, host, port, username, and password from the environment variables you passed to the Lambda function in the OpenTofu code,

- validate the TLS certificate using the CA cert you read in (2).

[!TIP] You’re using the same environment variables to pass the username and password to both the OpenTofu module and to Knex.js.

-

-

-

Create your first schema migration

knex migrate:make create_customers_tablesThis will create

- a

migrationsfolder, and within it,- a file called

<TIMESTAMP>_create_customers_table.js, whereTIMESTAMPis a timestamp representing when you ran theknex migrate:makecommand.

- a file called

- a

-

Define the schema migration for the

customerstable// <TIMESTAMP>_create_customers_table.js // (1) exports.up = async (knex) => { // (2) await knex.schema.createTable("customers", (table) => { table.increments("id").primary(); table.string("name", 128); table.date("date_of_birth"); table.integer("balance"); }); // (3) return knex("customers").insert([ { name: "Brian Kim", date_of_birth: "1948-09-23", balance: 1500 }, { name: "Karen Johnson", date_of_birth: "1989-11-18", balance: 4853 }, { name: "Wade Feinstein", date_of_birth: "1965-02-25", balance: 2150 }, ]); }; // (4) exports.down = async (knex) => { return knex.schema.dropTable("customers"); };

With Knex.js, you define your schemas, and any updates to them, in sequential .js files as follows:

-

(1): Within each

.jsfile, theupfunction is where you define how to update the database schema. -

(2): This code creates the

customerstable with the exact same schema you first saw in Defining a schema, except- instead of using raw SQL (

CREATE TABLE), you use a JavaScript API (createTable()).

- instead of using raw SQL (

-

(3): This code populates the database with some initial data, adding the exact same three customers to the

customerstable that you initially saw in Writing and Reading, again- using a fluent JavaScript API instead of raw SQL.

-

(4): Within each

.jsfile, thedownfunction is where you define how to undo the schema changes in theupfile.-

This gives you a way to roll back changes in case of bugs, outages, or as part of testing.

-

The code here deletes the

customertable that was created in theupfunction.

-

Create the Lambda function that query PostgreSQL

The Lambda function will

- uses Knex.js to connect to the PostgreSQL database over TLS

- run queries

- return the results as JSON

-

Create

app.js- the entrypoint of the Lambda functionconst knex = require("knex"); const knexConfig = require("./knexfile.js"); // (1) const knexClient = knex(knexConfig); // (2) exports.handler = async (event, context) => { const result = await knexClient("customers") // (3) .select() .where("date_of_birth", ">", "1950-12-31"); // (4) return { statusCode: 200, headers: { "Content-Type": "application/json" }, body: JSON.stringify({ result }), }; };[!TIP] Knex.js can also be used to query the database

- (1): Load the database connection configuration from knexfile.js.

- (2): Create a Knex.js client, using the configuration from (1) to connect it to the PostgreSQL database.

- (3): Use the Knex.js client to perform the exact database query you saw in Writing and Reading data, which fetches all customers born after 1950.

- (4): Return the results of the query as JSON.

Deploy the example

-

Set environment variables for username/password

export TF_VAR_username=<username> # FILL IN export TF_VAR_password=<password> # FILL IN[!TIP] Save these credentials in a password manager, such as 1Password

-

Initialize and apply the OpenTofu module

cd .. tofu init tofu apply -

When apply completes (which can take 5-10 minutes for RDS to be deployed), you should see the output variables:

app_endpoint = "https://765syuwsz2.execute-api.us-east-2.amazonaws.com" db_name = "bank" db_port = 5432 db_host = "bank.c8xxxxxx7qwb.us-east-2.rds.amazonaws.com"

After the PostgreSQL database is deployed, you use the Knex CLI to apply schema migrations.

-

Expose the database name, host, port to the Knex CLI (using environment variables)

export DB_NAME=bank export DB_PORT=5432 export DB_HOST=<db_host> # value of db_host output variable -

Apply the schema migrations

cd src knex migrate:latestBatch 1 run: 1 migrationsIf the migrations apply successfully, your database should be ready to use.

-

Verify that the app is working

curl https://<app_endpoint>{ "result":[ {"id":2,"name":"Karen Johnson","date_of_birth":"1989-11-18","balance":4853}, {"id":3,"name":"Wade Feinstein","date_of_birth":"1965-02-25","balance":2150} ] }

Get your hands dirty: Working with relational databases

-

In order to allow the Lambda function to access the PostgreSQL database, the

rds-postgresmodule makes the database accessible over the public Internet, from any IP, which is not a good security posture.Update the code to

-

The Lambda function is using the master user for the database, which means it has permissions to do anything.

Update the code to follow the principle of least privilege

- creating a more limited database user that only has the permissions the function needs, e.g., read access to one table

- passing the credentials of this new database user to the Lambda function.

-

Any secrets you pass into OpenTofu resources, such as the database master user password, are stored in OpenTofu state.

To ensure these secrets are stored securely,

-

Make sure to enable encryption for your OpenTofu state backend, as in Chap 5 - Example: Use S3 as a remote backend for OpenTofu state.

-

Alternatively, use a different approach to manage the password so it doesn’t end up in OpenTofu state at all, such as

-

Caching: Key-Value Stores and CDNs

Cache

What is cache

cache : a component that stores data so that future requests for that data can be served faster15

To achieve low latency, the cache is stored

- in the memory (instead of on on disk)

- in a format that optimized

- for rapid retrieval, e.g. hash table

- rather than flexible query mechanics, e.g. relational tables

Uses cases for cache

-

Slow queries

If queries to your data stores take a long time, you can cache the results for faster lookups.

-

Slow aggregates

Sometimes, individual queries are fast, but you have to issue many queries, and aggregating all of them takes a long time.

-

High load

If you have a lot of load on your primary data store, queries may become slow due to contention for limited resources (CPU, memory, etc).

Using a cache to offload many of the requests can reduce load on the primary data store, and make

- those requests faster

- all other requests faster, too

An simple version of cache

You can have a cache by using an in-memory hash table directly in your application code:

e.g.

-

A cache in JavaScript

const cache = {}; // (1) function query(key) { // (2) if (cache[key]) { return cache[key]; } const result = expensiveQuery(key); // (3) cache[key] = result; return result; }This is an example of cache-aside strategy16:

-

(1): The cache is a hashtable (aka map or object) that the app stores in memory.

-

(2): When you want to perform a query, the first thing you do is

- check if the data you want is already in the cache.

- If so, you return it immediately (without having to wait on an expensive query).

- check if the data you want is already in the cache.

-

(3): If the data isn’t in the cache, you

-

perform the expensive query

e.g. send a query to the primary data store

-

store the result in the cache (so future lookups are fast)

-

then return that result.

-

-

This cache - with cache-aside strategy - is a “simplified” cache because:

| Aspect | The problem | Note | |

|---|---|---|---|

| 1. Memory usage | The cache will grow indefinitely, which may cause your app to run out of memory. | You need a caching mechanism to evict data when the cache size is exceeded its limit | Can be solved with better code |

| 2. Concurrency | The code doesn’t handle multiple concurrent queries that all update the cache. | You may have to use synchronization primitives, e.g., locking | Can be solved with better code |

| 3. Cold starts | Every single time you redeploy the app, it will start with an empty cache, which may cause performance issues. | You need a way to store the cache to disk so it’s persistent | |

| 4. Cache invalidation17 | The code only handles read operations, but not write operations, so future queries may return stale data. | You need some way to update (when you write data) or invalidate that data in the cache |

note

For more complicated cases, the typical way to handle caching is by deploying a centralized data store that is dedicated to caching, e.g. key-value stores, CDNs.

With centralized data store:

-

You avoid cold starts

-

You have only a single to update when do cache invalidation

e.g.

- You might do write-through caching, where whenever you write to your primary data store, you also update the cache.

Key-Value Stores

What is key-value store

key-value store : data store that is optimized for extremely fast lookup by a key : ~ a distributed hash table : acts as a cache between your app servers & primary data store

How key-value store works

Requests with the corresponding keys that:

- are in the cache (aka a cache hit) will

- be returned extremely fast (without having to talk to the primary data store)

- aren’t in the cache (aka a cache miss) will

- go to the primary store

- be added to the cache (for future cache hits)

The API for most key-value stores primarily consists of just 2 type of functions:

- a function to insert a key-value pair

- a function to lookup a value by key

e.g.

-

With Redis, they’re

SETandGET$ SET key value OK $ GET key value

Key-value stores do not require you to define a schema ahead of time, so you can store any kind of value you want.

caution

Key-value store is sometimes referred to as schema-less, but this is a misnomer (as you see in Schema & constraints of document stores).

Typically, the values are either

- simple scalars, e.g., strings, integers…

- or blobs that contain arbitrary data that is opaque to the key-value store.

warning

Since key-value store is only aware of keys and very basic types of values, the functionality is typically limited compared to relational database.

[!IMPORTANT] Key takeaway #4 Use key-value stores to cache data, speeding up queries and reducing load on your primary data store.

Which key-value store solutions are in the market

You can:

-

self-host key value stores with Some of the major players in the key-value store space include Redis / Valkey 18, Memcached, Riak KV

-

or use a managed service Redis Cloud, Amazon ElastiCache, Amazon DynamoDB 19, Google Cloud Memorystore, Azure Cache for Redis, and Upstash.

After you have a key-value store deployed, many libraries can automatically use them for cache-aside and write-through caching without you having to implement those strategies manually.

e.g.

- WordPress has plugins that automatically integrate with Redis and Memcached

- Apollo GraphQL supports caching in Redis and Memcached

- Redis Smart Cache plugin can give you automatic caching for any database you access from Java code via the Java Database Connectivity (JDBC) API.

CDNs

What is CDN

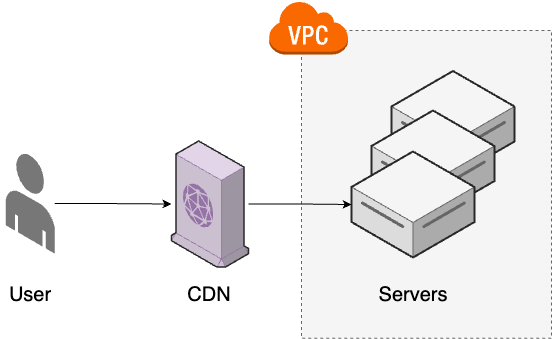

content delivery network (CDN) : a group of servers - called Points of Presence (PoPs) - that are distributed all over the world : - cache data from your origin servers, i.e. your app servers : - serve that data to your users from a PoP that is as close to that user as possible. : acts as a cache between your users & your app servers

How CDN works

When a user makes a request, it first goes to the CDN server that is closest to that user, and

- if the content is already cached, the user gets a response immediately.

- If the content isn’t already cached, the CDN forwards the request to your origin servers:

- fetches the content

- caches it (to make future requests fast)

- then returns a response

Why use CDN

-

Reduce latency

CDN servers are distributed all over the world

e.g.

- Akamai has more than 4,000 PoPs in over 130 countries

which:

- allows you to serve content from locations that are physically closer to your users, which can significantly reduce latency (See common latency numbers)

- without your company having to invest the time and resources to deploy and maintain app servers all over the world.

-

Reduce load

Once the CDN has cached a response for a given key, it no longer needs to

- send a request to the underlying app server for that key

- at least, not until the data in the cache has expired or been invalidated.

If you have a good cache hit ratio20, this can significantly reduce the load on the underlying app servers.

-

Improve security

Many CDNs these days can provide additional layers of security, such as

- a web application firewall (WAF), which can inspect and filter HTTP traffic to prevent certain types of attacks, e.g. SQL injection, cross-site scripting, cross-site forgery

- Distributed Denial-of-Service (DDoS) protection, which shields you from malicious attempts to overwhelm your servers with artificial traffic generated from servers around the world.

-

Other benefits

As CDNs become more advanced, they offer more and more features that let you take advantage of their massively distributed network of PoPs:

- edge-computing, where the CDN allows you to run small bits of code on the PoPs, as close to your users (as close to the “edge”) as possible

- compression, where the CDN automatically uses algorithms such as Gzip or Brotli to reduce the size of your static content and thereby, reduce bandwidth usage

- localization, where knowing which local PoP was used allows you to choose the language in which to serve content.

[!IMPORTANT] Key takeaway #5 Use CDNs to cache static content, reducing latency for your users and reducing load on your servers.

When to use CDN

You can use CDN to cache many types of contents from your app server:

- dynamic content: content that is different for each user and request

- static content: content that

- (a) is the same for all of your users, and

- (b) doesn’t change often.

But CDNs provides most value when be used to cache static content (static files):

- images, videos, binaries

- HTML, CSS, JavaScript

e.g.

- News publications can usually offload a huge portion of their traffic to CDNs, as once an article is published:

- every user sees the same content, and

- that content isn’t updated too often.

Which CDN to use

Some of the major players in the CDN space include

File Storage: File Servers and Object Stores

Why you shouldn’t store static files in a database

You can store static files (as a blob) in a database, which

-

may has some benefits:

- all data is kept in a single system where you already have security controls, data backups, monitoring…

-

but also has many disadvantages:

-

Slower database

Storing files in a database bloats the size of the database, which:

- makes the database itself slower

- makes the scalability & availability of the database worse (the database itself is already a bottleneck)

-

Slower & more expensive replicas & backups

The bigger the database the slower & more expensive to make replicas and backups.

-

Increased latency

Serving files from your database to a web browser requires you to

- proxy each file through an app server, which

- significantly increases latency

- compared to serving a file directly, e.g. via the

sendfilesyscall

- proxy each file through an app server, which

-

CPU, memory, and bandwidth overhead

Proxying files in a database through an app server

- increases bandwidth, CPU, and memory usage,

- both on the app server and the database (which makes the database even more of a bottleneck).

-

note

Instead of storing static files in a database, you typically store and serve them from dedicated file servers

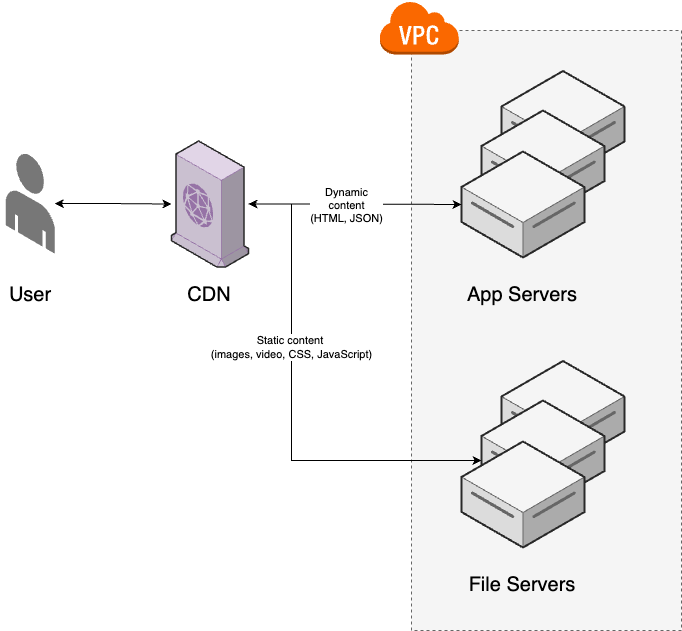

File Servers

What is a file server

file server : a server that is designed to store & serve static files (aka static content), such as images, videos, binaries, JavaScript, CSS

Why use file servers

By using dedicated file servers,

- all static content are handle by file servers.

This allows your app servers to focus entirely on

- serving dynamic content (content that is different for each user & request)

How to use file servers

Files servers are usually used together with CDNs and your app server.

Users requests first go to a CDN, which

- if it is already cached, returns a response immediately

- if not, the CDN uses

- file servers as origin servers for static content

- app servers as origin servers for dynamic content

Which file servers to use

Almost any web server software can be configured to serve files.

e.g. Apache, Nginx, HAProxy Varnish, Lighttpd, Caddy, Microsoft IIS.

The challenges when working with file servers

Serving files is straightforward; the hard part is handling

-

Storage

You need to provide sufficient hard drive capacity to store the files.

-

Metadata

In additional to the files, you need to store metadata related to the files, e.g. names21, owner, upload date…

You could store the metadata

- on the file system next to the files themselves, or

- in a separate data store (e.g., a relational database), which makes it easier to query the metadata 👈 more common approach

-

Security

You need to

- control who can can create files, read files, update files, and delete files.

- encrypt data at rest and in transit.

-

Scalability & availability

You could host all the files on a single server, but a single server is a single point of failure (as you know from Why use an orchestration | Chapter 3)

To support a lot of traffic, and to be resilient to outages, you typically need to figure out how to host files across multiple servers.

note

Instead of using file servers and solving all these problems yourself, which requires

- many custom toolings

- a lot of servers, hard drives…

You can offload these work to a 3rd-party object store.

Object Stores

What is Object Store

object store : aka blob store : a system designed to : - store opaque objects (blobs) : - often in the form of files with associated metadata. : ~ file server as a service (from cloud providers)

Which Object Store to use

The major players in this space are

Why use Object Store

-

Object stores provide out-of-the-box solutions to the challenges with file servers:

-

Storage

Object stores provide nearly unlimited disk space, usually for dirt-cheap prices.

e.g.

- Amazon S3 is around two cents per gigabyte per month, with a generous free tier.

-

Metadata

Most object stores allow you to associate metadata with each file you upload.

e.g.

- S3 allows you to configure both

- system-defined metadata (e.g., standard HTTP headers such as entity tag

- content type, as you’ll see later in this blog post)

- user-defined metadata (arbitrary key-value pairs).

- S3 allows you to configure both

-

Security

Most object stores offer fine-grained access controls and encryption.

e.g.

- S3 provides

- IAM for access control,

- TLS for encryption in transit

- AES (using a KMS) for encryption at rest.

- S3 provides

-

Scalability & availability

Object stores typically provide scalability and availability at a level few companies can hope to achieve.

e.g.

- S3 Standard provides

- unlimited scalability

- 99.99% availability

- 99.999999999% durability23.

- S3 Standard provides

-

-

Many object stores also provide many other useful features:

-

replication across data centers in different regions

-

search & analytics across all the files you store in the object store

e.g.

- Amazon Athena allows allows you to use SQL to query CSV, JSON, ORC, Avro, or Parquet files stored in S3

-

integration with compute to help automate workflows

e.g.

- you can have S3 automatically trigger a Lambda function each time you upload a file

-

automatic archival or deletion of older files (to save money)

These features is why even companies who otherwise keep everything on-prem often turn to the cloud and object stores for file storage.

[!IMPORTANT] Key takeaway #6 Use file servers and object stores to serve static content, allowing your app servers to focus on serving dynamic content.

Example: Serving Files With S3 and CloudFront

Create an S3 bucket configured for website hosting

note

The s3-website OpenTofu module

-

in sample code repo at

ch9/tofu/modules/s3-websitefolder -

will:

- creates an S3 bucket

- makes its contents publicly accessible

- configures it as a website, which means it can support

- redirects

- error pages

- accessing logging, and so on.

In this example, you will use the s3-website OpenTofu module to create an S3 bucket configured for website hosting

-

Create an folder for the root module

cd examples mkdir -p ch9/tofu/live/static-website cd ch9/tofu/live/static-website -

The

main.tfroot module# examples/ch9/tofu/live/static-website/main.tf provider "aws" { region = "us-east-2" } module "s3_bucket" { source = "github.com/brikis98/devops-book//ch9/tofu/modules/s3-website" # TODO: fill in your own bucket name! name = "fundamentals-of-devops-static-website" # (1) index_document = "index.html" # (2) }-

(1): The name to use for the S3 bucket.

[!NOTE] S3 bucket names must be globally unique, so you’ll have to fill in your own bucket name here.

-

(2): The suffix to use for directory requests.

- If you set this to

index.html, a request for the directory/foowill return the contents of/foo/index.html.

- If you set this to

-

-

Proxy the

s3_website_endpointfroms3_bucketto root module# examples/ch9/tofu/live/static-website/outputs.tf output "s3_website_endpoint" { description = "The endpoint for the website hosted in the S3 bucket" value = module.s3_bucket.website_endpoint }

Upload static content to the S3 bucket

-

Create a simple HTML page

-

Create the

contentfolder within thestatic-websitefolder:mkdir -p content -

Create 3 files in

contentfolder-

index.html<!-- examples/ch9/tofu/live/static-website/content/index.html --> <html lang="en"> <head> <title>Fundamentals of DevOps and Software Delivery</title> <link rel="stylesheet" href="styles.css" /> </head> <body> <h1>Hello, World!</h1> <p> This is a static website hosted on S3, with CloudFront as a CDN. </p> <img src="cover.png" alt="Fundamentals of DevOps and Software Delivery" /> </body> </html> -

style.css/* examples/ch9/tofu/live/static-website/content/style.css */ html { max-width: 70ch; margin: 3em auto; } h1, p { color: #1d1d1d; font-family: sans-serif; } -

cover.png(examples/ch9/tofu/live/static-website/content/cover.png)Copy any

pngimage to the content folder, and name itcover.png.

-

-

-

Update that HTML page to your S3 bucket (using OpenTofu

aws_s3_objectresource)-

Update the

main.tfto useaws_s3_objectresourceprovider "aws" { # ... } module "s3_bucket" { # ... } resource "aws_s3_object" "content" { for_each = { # (1) "index.html" = "text/html" "styles.css" = "text/css" "cover.png" = "image/png" } bucket = module.s3_bucket.bucket_name # (2) key = each.key # (3) source = "content/${each.key}" # (4) etag = filemd5("content/${each.key}") # (5) content_type = each.value # (6) cache_control = "public, max-age=300" # (7) }-

(1): Have the

aws_s3_objectresource loop over a map where- the key is a file to upload from the content folder

- the value is the content type for that file.

-

(2): Upload the files to the S3 bucket you created earlier.

-

(3): For each file, use the key in the map as its path within the S3 bucket.

-

(4): Read the contents of each file from the

contentfolder. -

(5): Set the entity tag (ETag)24 to the MD5 hash of each file’s contents.

- This is also used by OpenTofu to know when the file has changed, so it uploads a new version when you run

apply.

- This is also used by OpenTofu to know when the file has changed, so it uploads a new version when you run

-

(6): Set the content type25 for each file to the value in the map.

-

(7): Set the cache control26 value for each file to:

-

-

[!WARNING] Watch out for snakes: Don’t upload files to S3 using OpenTofu in production

Using the

aws_s3_objectresource to upload files to an S3 bucket is convenient for simple examples and learning, but don’t use it for production use-cases:

- If you have a large number of files, you may end up with performance and throttling issues with the

aws_s3_objectresource.- You typically want to put static content through an asset pipeline which provides functionality such as minification, fingerprinting, and compression, none of which you can do with OpenTofu.

note

In production, to upload files to S3, you should use either

-

an asset pipeline built into your web framework, or

e.g. Ruby on Rails Asset Pipeline with the asset_sync Gem

-

a library designed to efficiently sync images with S3

e.g. s3_website.

Deploy S3 bucket and static content to S3 bucket

-

Initialize and apply OpenTofu root module

tofu init tofu apply -

Verify that your website (hosted on S3) is up and running

Use a web browser to open

http://<s3_website_endpoint>[!NOTE] Websites hosted on AWS S3 only support HTTP.

To add HTTPS, you need to use AWS CloudFront.

Deploy CloudFront as a CDN in front of the S3 bucket

note

The OpenTofu module cloudfront-s3-website

- in sample code repo at

ch9/tofu/modules/cloudfront-s3-websitefolder - will

- create a globally-distributed CloudFront distribution

- configure your static website in S3 as an origin

- set up a domain name & TLS certificate

- plugs in some basic caching settings

In this example, you will use the OpenTofu module cloudfront-s3-website to deploy CloudFront as a CDN in front of the S3 bucket:

-

Update

main.tfto usecloudfront-s3-websitemoduleprovider "aws" { # ... } module "s3_bucket" { # ... } resource "aws_s3_object" "content" { # ... } module "cloudfront" { source = "github.com/brikis98/devops-book//ch9/tofu/modules/cloudfront-s3-website" bucket_name = module.s3_bucket.bucket_name # (1) bucket_website_endpoint = module.s3_bucket.website_endpoint # (2) min_ttl = 0 # (3) max_ttl = 300 default_ttl = 0 default_root_object = "index.html" # (4) }-

(1): Pass in the S3 bucket name, which is mostly used as the unique ID within the CloudFront distribution.

-

(2): Pass in the S3 bucket website endpoint.

- CloudFront will use this as the origin, sending requests to it for any content that isn’t already cached.

-

(3): Configure the time-to-live (TTL) settings for the cache, which specifies the minimum, maximum, and default amount of time, in seconds, that objects are allowed to

- remain in the CloudFront cache

- before CloudFront

- sends a new request to the origin server

- to check if the object has been updated.

The preceding code configures CloudFront to

- rely on the response headers (e.g., the cache control header) for caching instructions,

- but never caching content for more than 5 minutes.

This is a convenient setting for testing, as it ensures you don’t have to wait more than 5 minutes to see the latest version of your content.

-

(4): Configure CloudFront to

- return the contents of

index.html - whenever someone makes a request to the root of your CloudFront distribution’s domain name.

- return the contents of

-

-

Add CloudFront distribution domain name as an output variable

# examples/ch9/tofu/live/static-website/outputs.tf output "cloudfront_domain_name" { description = "The domain name of the CloudFront distribution" value = module.cloudfront.domain_name }

-

Re-apply OpenTofu module

tofu apply[!TIP] CloudFront distribution can take 2-10 minutes to deploy.

-

Verify you can access the website via HTTPS at

https://<cloudfront_domain_name>

Get your hands dirty: S3 and CloudFront

-

Update the code to configure CloudFront to use a custom domain name and TLS certificate.

You could

- use

static.<YOUR-DOMAIN>as the domain name, where<YOUR-DOMAIN>is the domain name you registered in Route 53 in Chapter 7 - use AWS Certificate Manager (ACM) to provision a free, automatically-renewing certificate for this domain

- use

-

The

s3-websitemodule makes the S3 bucket publicly accessible.However, as you have a CDN in front of the S3 bucket, you can update the code to only allow the contents of the S3 bucket to be accessed via CloudFront.

Semi-Structured Data and Search: Document Stores

What is Semi-Structured Data

When you need to dealing with:

-

user-generated data with unpredictable structure, that you can’t pre-define schema

-

search across those user-generated data, including full-text search, fuzzy search, faceted search…

[!NOTE] Those data that

- does not obey the tabular structure of data models associated with relational databases or other forms of data tables,

- but nonetheless contains tags or other markers to separate semantic elements and enforce hierarchies of records and fields within the data.

is known as semi-structured data

you

-

can’t use relational database, which only works well when the data

- has clear, consistent, predictable structure

- can be stored in tables with well-defined schemas

-

need to use a document store

What is Document Store

document store : similar to a key-value store, except that values are : - richer data structures called documents : - understood, process by the document store

Which Document Store to use

There are 2 type of document stores:

- General-purpose document store: MongoDB, CouchDB, Couchbase, Google Firestore.

- Search-optimized29 document store:

Working with Document Store

Reading and Writing Data (Document Store)

To understand how to read and writing data to a document store, let’s use MongoDB as an example:

-

MongoDB allows you to store JSON documents in collections.

[!TIP] It’s similar to how a relational database allows you to store rows in tables.

-

MongoDB does NOT require you to

- define a schema for your documents.

[!TIP] With MongoDB, you can store JSON data in any format you want.

-

To read and write data, you use the MongoDB Query Language (MQL), which is similar to JavaScript.

e.g.

-

To write a JSON document into the

bankcollection, you can use theinsertOnefunction:db.bank.insertOne({ name: "Brian Kim", date_of_birth: new Date("1948-09-23"), balance: 1500, }); -

To write two JSON documents into the

bankcollection, you use theinsertManyfunction:db.bank.insertMany([ { name: "Karen Johnson", date_of_birth: new Date("1989-11-18"), balance: 4853, }, { name: "Wade Feinstein", date_of_birth: new Date("1965-02-25"), balance: 2150, }, ]); -

To read all data back from the

bankcollection, you can use thefindfunction (without any arguments)db.bank.find();[ { _id: ObjectId("66e02de6107a0497244ec05e"), name: "Brian Kim", date_of_birth: ISODate("1948-09-23T00:00:00.000Z"), balance: 1500, }, { _id: ObjectId("66e02de6107a0497244ec05f"), name: "Karen Johnson", date_of_birth: ISODate("1989-11-18T00:00:00.000Z"), balance: 4853, }, { _id: ObjectId("66e02de6107a0497244ec060"), name: "Wade Feinstein", date_of_birth: ISODate("1965-02-25T00:00:00.000Z"), balance: 2150, }, ];[!NOTE] You get back the exact documents you inserted, except for one new field:

_id.The

_idfield - added to every document by MongoDB - is used as- a unique identifier

- a key for lookups (similar to a key-value store).

-

To look up a document by ID, you also use

findfunction:db.bank.find({ _id: ObjectId("66e02de6107a0497244ec05e") });{ _id: ObjectId('66e02de6107a0497244ec05e'), name: 'Brian Kim', date_of_birth: ISODate('1948-09-23T00:00:00.000Z'), balance: 1500 }

[!NOTE] For both of key-value store and document store, you get the “value” by looking up a “key”.

The key different between key-value stores and document stores is:

- Key-value stores treat values as opaque

- Document stores treat values as transparent values, which is fully understood and processed.

Key-value store Document store “key” key set by you “key” set by document store “value” opaque value (simple scalars or blobs) transparent value

-

-

Compare to a key-value store, with MongoDB, you can look up values with richer query functionality:

e.g.

-

To look up customers born after 1950, you also use

findfunctiondb.bank.find({ date_of_birth: { $gt: new Date("1950-12-31") } });[ { _id: ObjectId("66e02de6107a0497244ec05f"), name: "Karen Johnson", date_of_birth: ISODate("1989-11-18T00:00:00.000Z"), balance: 4853, }, { _id: ObjectId("66e02de6107a0497244ec060"), name: "Wade Feinstein", date_of_birth: ISODate("1965-02-25T00:00:00.000Z"), balance: 2150, }, ]; -

To deduct $100 from all customers, you use

updateManyfunctiondb.bank.updateMany( {}, // (1) { $inc: { balance: -100 } }, // (2) );-

(1): The first argument is a filter to narrow down which documents to update.

- In this case, it’s an empty object, which doesn’t have any filter effect.

-

(2): The second argument is the update operation to perform.

- In this case, the update operation uses the

$incoperator to- increment all balances by -100,

- thereby deducting $100 from all customers.

- In this case, the update operation uses the

-

-

warning

Document stores

-

offers richer querying and update functionality (compare to a key-value store)

-

but has 2 major limitations, that is (for most document stores)

-

Do not support working with multiple collections, which means

- there is no support for joins31.

-

Don’t support ACID transactions.

-

ACID Transactions (Document Store)

Most document stores don’t support ACID transactions32.

-

You might get atomicity for updates on a single document.

e.g.

- When you update one document with

updateOnefunction

- When you update one document with

-

But you rarely get atomicity for updates to multiple documents.

e.g.

- If MongoDB crashes in the middle of the updateMany operation, the code might deduct $100 from some customers but not others.

warning

Again, be aware that most document stores don’t support ACID transactions.

Schemas and Constraints (Document Store)

Most document stores do NOT require you to

- define a schema or constraints up front.

This is sometimes referred to as schemaless33, but that’s a bit of a misnomer.

There is always a schema.

-

The more accurate term is schema-on-read34, in which

- the structure of the data (the schema) is implicit 👈 (implicit schema)

- the data only interpret the schema when the data is read 👈 schema-on-read

-

In contrast to schema-on-write - the traditional approach of relational databases, where

- the schema is explicit 👈 (explicit schema)

- the database ensures all data conforms to it when the data is written 👈 (schema-on-write)

[!TIP] Database’s schema is similar to type checking of programming language

- Schema-on-write ~ compile-time type checking

- Schema-on-read ~ dynamic (run-time) type checking

e.g.

-

To parse data from the

bankcollection in the previous section, you might use the following Java code:public class Customer { private String name; private int balance; private Date dateOfBirth; }This Java class defines the schema and constraint of the data:

- Which field should be in the data?

- Which data type of each field?

In other words, it’s the schema-on-read:

- Either the data matches the

Customerdata structure - Or you will get an error.

With schema-on-read, the data store don’t need to ensure the data to any structure while writing, so

- you can insert & store any data in the data store.

e.g.

-

You can insert a document with a subtle “error” into the

bankcollectiondb.bank.insertOne({ name: "Jon Smith", birth_date: new Date("1991-04-04"), // (1) balance: 500, });- MongoDB will let you insert this data without any complaints.

- But when you try to parse this data with the

Customerclass, you may get an error.

warning

With document stores, you can insert any data without any constraints (as of relational databases), so you may end up with a lot of errors:

e.g.

- Without domain constraints, you might have:

- typos in field names

- null/empty values for required fields

- incorrect types of fields…

- Without foreign key constraints, yo might:

- reference non-existent documents in other collections.

tip

Those errors with document stores can be prevented if you use a relational database.

note

Only use document stores (schema-on-read) when

-

you need to dealing with semi-structured, non-uniform data, e.g.

- user-generated documents

- event-tracking data

- log messages

- in case - for some reason - not all the items in the collections have the same structure.

-

the schema changes often35, or

-

you can sacrifice some part of writing performance.

[!IMPORTANT] Key takeaway #7 Use document stores

- for semi-structured and non-uniform data, where you can’t define a schema ahead of time,

- or for search, when you need full-text search, faceted search, etc.

Analytics: Columnar Databases

Columnar Database Basics

What is columnar database

columnar databases : Aka column-oriented databases : Databases used in online analytic processing (OLAP) system : Look similar to relational databases: : - store data in tables that consist of rows and columns, : - they usually have you define a schema ahead of time, and : - sometimes, they support a query language that looks similar to SQL. : However, there are a few major differences: : - Most columnar databases do not support ACID transactions, joins, foreign key constraints, and many other relational database’s key features. : - They are are column-oriented to optimize for operations across columns

tip

Relational databases are typically row-oriented, which means they are optimized for operations across rows of data.

How columnar database works

How databases store data

The serialized data may be stored different depending on the type of database.

e.g. A books table

| id | title | genre | year_published |

|---|---|---|---|

| 1 | Clean Code | Tech | 2008 |

| 2 | Code Complete | Tech | 1993 |

| 3 | The Giver | Sci-fi | 1993 |

| 4 | World War Z | Sci-fi | 2006 |

-

In a row-oriented relational database,

-

the serialized data may look like this:

[1] Clean Code,tech,2008 [2] Code Complete,tech,1993 [3] The Giver,sci-fi,1993 [4] World War Z,sci-fi,2006The values in each row will be kept together

-

-

In a column-oriented database,

-

the serialized data of the same data may look like this:

[title] Clean Code:1,Code Complete:2,The Giver:3,World War Z:4 [genre] tech:1,2,sci-fi:3,4 [year_published] 2008:1,1993:2,3,2006:4All the values in a single column are laid out sequentially, with

- the column values as keys, e.g.

1993 - the IDs as values, e.g.

2,3

- the column values as keys, e.g.

-

How databases query data

For previous books collections,

-

To look up all the books published in 1993, you can use the following query:

SELECT * FROM books WHERE year_published = 1993;id | title | genre | year_published ----+---------------+--------+---------------- 2 | Code Complete | tech | 1993 3 | The Giver | sci-fi | 1993[!NOTE] This query use

SELECT *, which - without indices - will read:- the

year_publishedcolumn of all rows 👉 to find the matching rows - every single column of any matching rows 👉 to return the data

Under the hood, there is a different in how the data is read:

-

With row-oriented storage:

- The data for each column (of a row) is laid out sequentially on the hard drive

👉 Since sequential reads is faster, row-oriented storage will be faster (for this type of query)

-

With column-oriented storage:

- the data for each column (of a row) is scattered across the hard drive

👉 Since random reads is slower, column-oriented storage will be slower (for this type of query)

- the

-

To compute an aggregation, for example, the number of books published in 1993, you use the following query:

SELECT COUNT(*) FROM books WHERE year_published = 1993;count ------- 2[!NOTE] This query use

COUNT(*), which will read:- only the

year_publishedcolumn of all rows to find the match rows

-

With row-oriented storage:

- The data for each column (of a row) is laid out sequentially on the hard drive, but each row is scattered across the hard drive

👉 This requires jumping all over the hard drive to read the

year_publishedvalue for each row, so row-oriented storage will be slower (for this type of query). -

With column-oriented storage:

- All the data for

year_publishedcolumn is laid out sequentially.

👉 Since sequentially reads is faster, column-oriented storage will be faster (for this type of query).

- All the data for

[!TIP] When you’re doing analytics, aggregate functions such as

COUNT,SUM,AVGcome up all the time, so the column-oriented approach is used in a large number of analytics use cases - only the

Analytics Use Cases

The analytics space is massive, this book only list a few of the most common categories of tools:

| Analytics Uses Cases | Description | Popular tools |

|---|---|---|

| General-purpose columnar databases | Data stores used for a wide variety of use cases | Cassandra, Google Bigtable, HBase, Kudu |

| Time-series databases | Data stores designed for storing & analyzing time-series data36 (e.g. metrics, logs) | InfluxDB, Amazon Timestream, Prometheus, Riak TS, Timescale, Honeycomb |

| Big data systems | Systems designed to process big data37 | - MapReduce model38 / Hadoop 39, Cloudera - Amazon EMR 40, Google Dataproc, Azure HDInsight |

| Fast data systems | Systems designed to do stream processing41 | - Apache’s Spark, Flink, Storm, Samza, Beam, Druid, Pinot - Amazon Data Firehose |

| Data warehouses | A data warehouse is a central repository42 where you integrate data from all of your other systems43. | - Snowflake - Amazon Redshift, Google BigQuery, Azure Synapse Analytics - Apache Hive, Oracle Enterprise Data Warehouse, Teradata, Informatica, Vertica |

[!IMPORTANT] Key takeaway #8 Use columnar databases for

- time-series data

- big data

- fast data

- data warehouses

- and anywhere else you need to quickly perform aggregate operations on columns.

tip

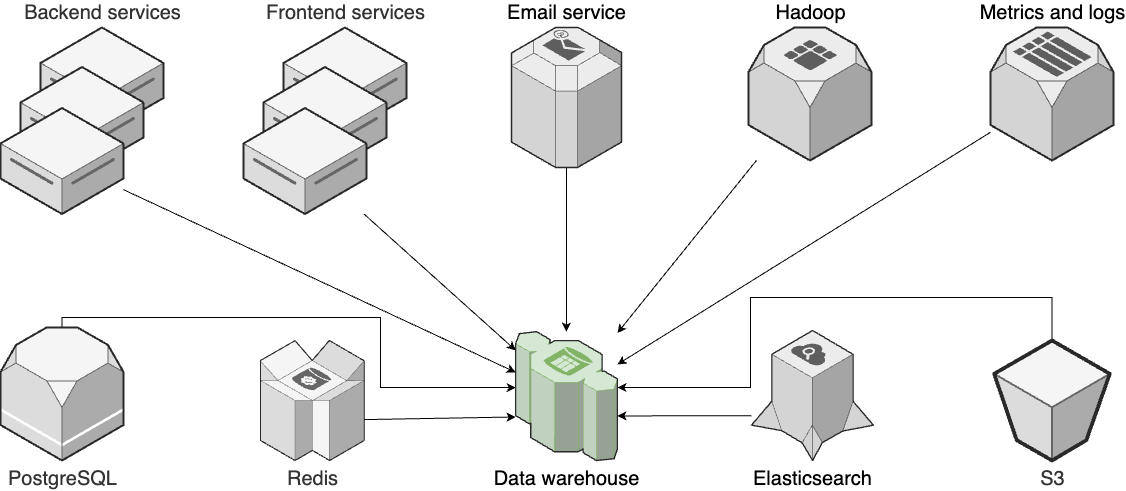

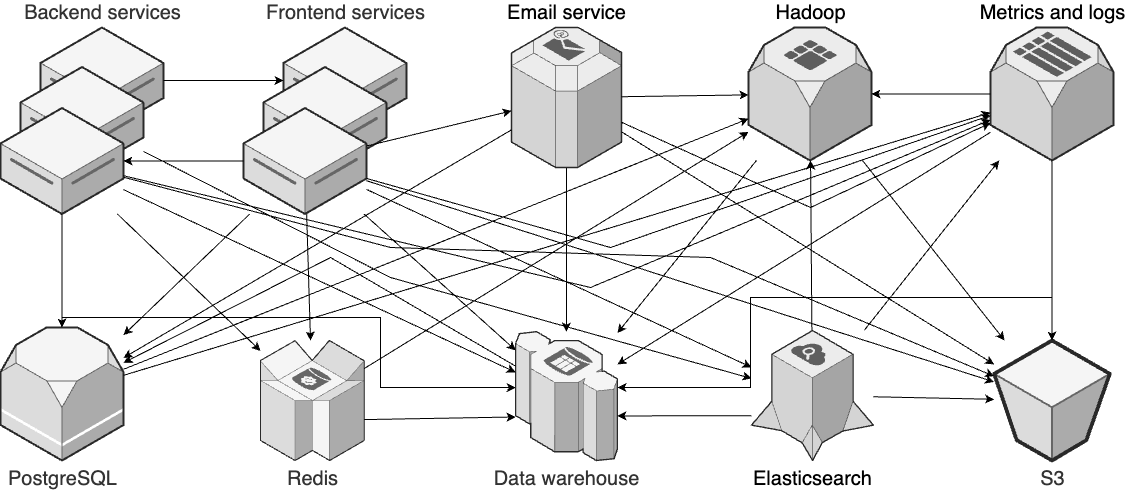

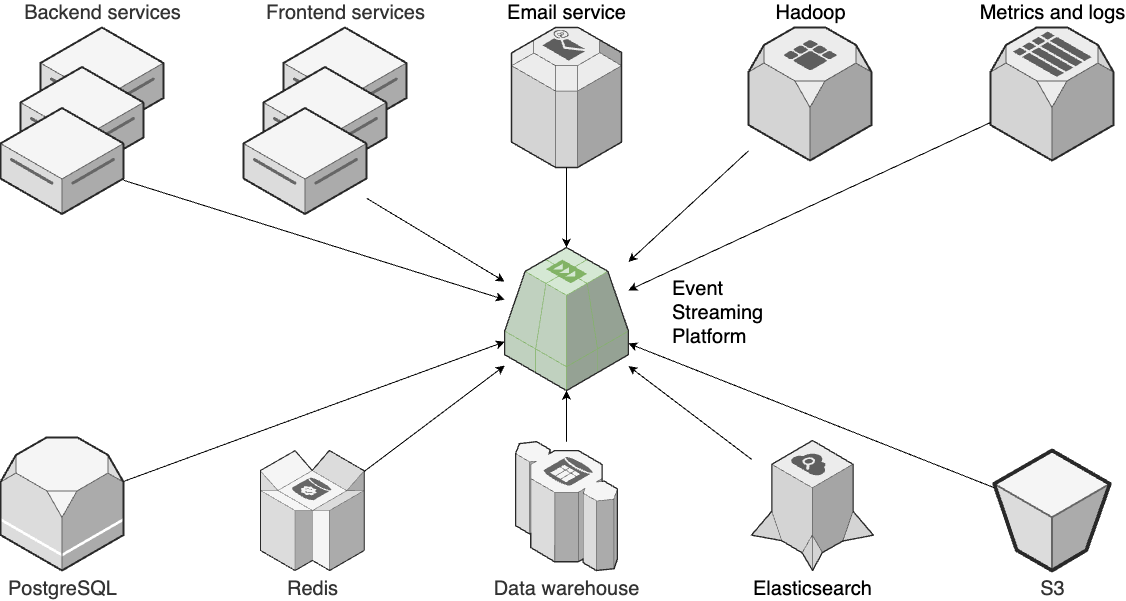

A data warehouse looks like this

.>

.>

It looks simple, but in fact, it’s a lot more complicated:

-

each arrow from the various systems to the data warehouse are actually complicated background process known as extract, transform, and load (ETL), where you

- use specialized software, e.g.

- to

- extract data from one system that uses one format,

- transform it into the format used by another system (cleaning up and standardizing the data along the way),

- then load it into that other system

-

there are

- not only arrows from each system to the data warehouse

- but also arrows between these systems, too, which now representing background jobs, event-based communication… 👈 aka asynchronous processing

Asynchronous Processing: Queues and Streams

In chap 7, you’ve learned that with microservices,

-

you need to figure out service discovery, so your services can know which endpoint they use talk to another service.

-

these microservices are interacting synchronously.

e.g. When service A needs to talk to service B

- 1: Service A figure out the endpoint of service B by using service discovery (or service mesh).

- 2: Using that endpoint, service A

- 2.1: sends an request to service B

- 2.2: 👈 Service B process the request immediately

- 2.3: wait for service B to response

[!WARNING] If

- service A can’t figure the endpoint of service B, or

- service B doesn’t response

then it’s a fail request.

In chap 6, you’ve also known that there are other ways to breakup codebase into services, one of them is event-driven architecture, which use a different approach for communication - the services interacts asynchronously (instead of synchronously).

e.g.

-

A simple version of asynchronously communication look like this:

When service A needs to talk to service B:

- 1: Service A figure out the endpoint of service B by using service discovery (or service mesh).

- 2: Service A sends a asynchronous messages to service B, then move on (without waiting for response)

- 3: Service B can process that message at its own packet.

- 4: If a response is needed, service B send a new asynchronous message to service.

[!WARNING] In this simple version, service B could:

- have a bug 👉 process a messages multiple times

- out-of-memory or crash 👉 lost all messages

Both ways would cause negative consequence for your business.

-

To ensures each messages is (eventually) processed only once:

- You don’t typically just

- send the messages from service A directly to service B

- have service B hold the messages on its memory, which could:

- used up on the memory of service B

- cause a losing of all unprocessed messages (if service B crash)

- Instead,

- service A sends messages to

- service B reads messages from

a shared data store designed to facilitate asynchronous communication by:

- (1) persisting messages to disk 👈 no more lost messages

- (2) tracking the state of those messages 👈 no more processing a messages more than once…

- You don’t typically just

There are 2 type of data store that can do this:

- Message queues

- Event streams

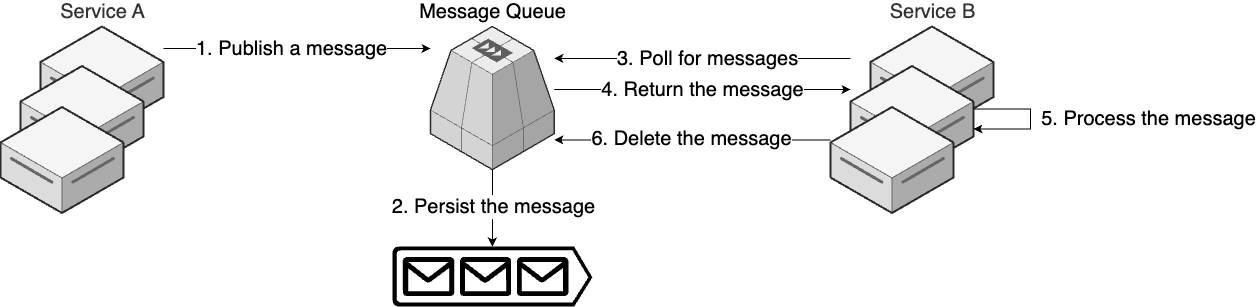

Message Queues

What is Message Queue

message queue : a data store that can be used for asynchronous communication between: : - producers, who write messages to the queue, : - consumers, who read messages from the queue

note

Many producers and consumers can use the queue, but

- each message is processed only once, by a single consumer.

For this reason, this messaging pattern is often called one-to-one, or point-to-point, communications.

Which Message Queue to use

Some of the most popular message queues are:

How Message Queue Works

The typical process of using a queue is:

-

A producer, such as service A, publishes a message to the queue.

-

The queue persists the message to disk.

[!NOTE] This ensures the message will eventually be processed, even if the queue or either service has an outage.

-

A consumer, such as service B, periodically polls the queue to see if there are new messages.

-

When there is a new message, the queue returns the message to service B.

[!NOTE] The queue may record that the message is “in progress” so that no other consumer can read the message at the same time.

-

Service B processes the message.

-

Once the message has been successfully processed, service B deletes the message from the queue.

[!NOTE] This ensures that the message is only processed one time.

When to use Message Queues

Queues are most often used for

- tasks that run in the background,

- (as opposed to tasks you do during a live request from a user).

e.g.

-

Process images

When users upload images,

-

if you need to process each image

e.g.

- create copies of the image in different sizes for web, mobile, thumbnail previews…

-

you may want to do that in the background, rather than making the user wait for it.

To do that,

- Your frontend server

- stores the original image on a file server

- adds a message to a queue with the location of the image

- Later on, a separate consumer

- reads the message from the queue,

- downloads the image from the file server,

- processes the image, and

- when it’s done, deletes the message from the queue.

-

-

Encoding videos, sending email campaigns, delivering notifications, generating reports, and order processing.

Why use Message Queues

Using queues for asynchronous communication between services provides several key benefits:

-

Handle traffic spikes

A queue acts as a buffer between your services, which allows you to deal with spikes in traffic.

e.g.

-

If traffic from service A suddenly increased by 10x:

- With service A and B were communicating synchronously, then

- B might not be able to keep up with the load, and

- you’d have outages and lost messages.

- With the queue in between,

- service A can write as many messages as it wants, and

- service B can process them at whatever rate it can handle.

- With service A and B were communicating synchronously, then

-

-

Decoupling

-

With synchronous communication, every service needs to know the interface to talk to every other service.

-

In a large company,

- one service may use JSON over HTTP,

- a second may use Protocol Buffers over HTTP/2,

- a third may use gRPC,

- a fourth may work with one service discovery mechanism,

- a fifth doesn’t support service discovery, and

- a sixth may be part of a service mesh that requires mTLS.

Connecting all these disparate services together may be a massive undertaking.

-

-

With asynchronous communication via a message queue,

- each service solely needs to know how to talk to one thing, the API used by the message queue,

- so it gives you a decoupled, standardized mechanism for communication.

-

-

Guarantee tasks are completed

-

With synchronous communication,

If service A sends a message to service B, but never

- gets a response, or

- gets an error,

What do you do? Most synchronous code doesn’t handle those case at all, and just errors out.

- If this is during a live request from a user, the user might get a weird error message, which isn’t a great product experience.

- If this is during a task running in the background, the task might be lost entirely.

You could update your synchronous code with retry logic, but this might result in

- service B processing the message multiple times, or,

- if service B is overloaded, it might make the problem worse.

-

Using asynchronous communication with a message queue allows you to guarantee that

- each task is (eventually) completed,

- even in the face of outages, crashes, and other problems,

- as the queue persists message data and metadata (e.g., whether that message has been processed).

[!WARNING] Most message queues - a type of distributed systems - provide at least once delivery45, so:

- The consumers might receive a message more than once.

But you can write the consumers to be idempotent, so

- if the consumers see the same message more than once,

- it can handle it correctly.

-

-

Guarantee ordering and priority

Some message queues can guarantee

-

not only at least once delivery,

-

but also that messages are delivered in a specific order

e.g.

- Some queues can guarantee that messages are delivered in the order they were received, known as first-in, first out (FIFO)

- Some queues allow you to specify a priority for each message, guaranteeing messages with the highest priorities are delivered first (priority queues).

-

[!IMPORTANT] Key takeaway #9 Use message queues to run tasks in the background, with guarantees that tasks are

- completed

- executed in a specific order.

note

Message queues are used for

- one-to-one communication

- between a producer and a consumer

For one-to-many communication between a producer and many consumers, you need to use event streams.

Event Streams

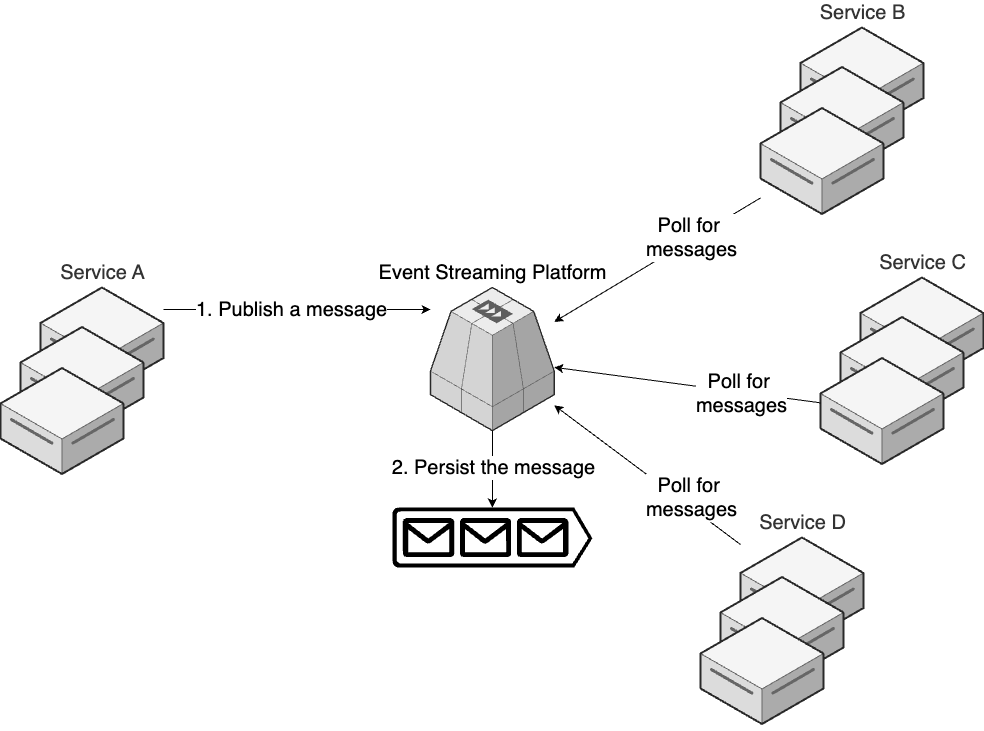

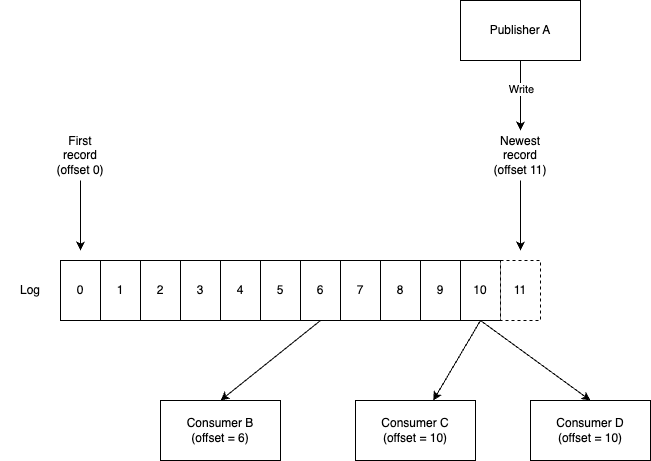

What is Event Stream